Big Model Daily Roundup, October 30

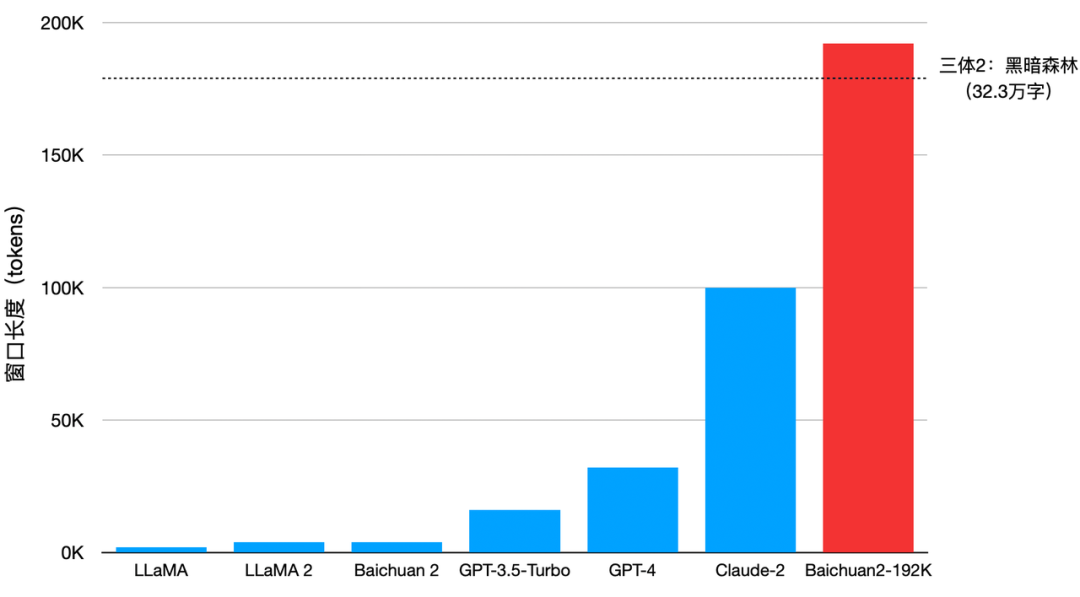

[Big Model Daily Roundup, October 30] Turing Award winners clash, with LeCun calling the AI extinction theory of Bengio, Hinton, and others absurd; the most powerful Chinese open-source large model is here, with 13 billion parameters and zero barrier to commercial use, from Kunlun Wanwei; Baichuan Intelligent launches Baichuan2-192K, the large model with the world’s longest context window, which can take in 350,000 Chinese characters at a time, surpassing Claude2; an interpretable nucleotide language model based on Transformer and attention, used for optimized pegRNA design

Turing Award winners clash: LeCun calls the AI extinction theory of Bengio, Hinton, and others absurd

Link: https://news.miracleplus.com/share_link/11280

On the question of AI risk, leading figures across the field hold differing opinions. Some took the lead in signing a joint letter calling on AI laboratories to immediately suspend research, and of the three giants of deep learning, Geoffrey Hinton and Yoshua Bengio, among others, support this view. In recent days, Bengio, Hinton and others issued a joint letter, “Managing AI Risks in an Era of Rapid Progress”, calling on researchers to adopt urgent governance measures and to prioritize safety and ethical practices before developing AI systems, and calling on governments to take action to manage the risks posed by AI. The letter proposes several urgent governance measures, such as involving national institutions to prevent the misuse of AI. To regulate effectively, governments need a comprehensive understanding of AI developments. Regulators should adopt a range of measures, including model registration, effective protection of whistleblowers, and monitoring of model development and supercomputer use. Regulators will also need access to advanced AI systems before deployment in order to assess their dangerous capabilities.

The most powerful Chinese open-source large model is here! 13 billion parameters, zero barrier to commercial use, from Kunlun Wanwei

Link: https://news.miracleplus.com/share_link/11281

The most thoroughly open-sourced large model is here: 13 billion parameters, with no application needed for commercial use. It also comes with one of the world’s largest open-source Chinese datasets: 600 GB, 150 billion tokens. This is the Skywork-13B series from Kunlun Wanwei, which includes two major versions. Skywork-13B-Base is the base model of the series and has come out on top in various benchmarks. Skywork-13B-Math is the math model of the series, and its mathematical ability ranked first in the GSM8K evaluation. On major authoritative benchmarks such as C-Eval, MMLU, CMMLU, and GSM8K, Skywork-13B is at the forefront of Chinese open-source models and achieves the best results among models of the same parameter scale.

Baichuan Intelligent launches Baichuan2-192K, the world’s longest context window model, which can input 350,000 Chinese characters at a time, surpassing Claude2

Link: https://news.miracleplus.com/share_link/11282

On October 30, Baichuan Intelligent released the Baichuan2-192K large model, whose context window of 192K is currently the longest in the world. Baichuan2-192K can process about 350,000 Chinese characters at a time. That is 4.4 times the capacity of Claude2, currently the best large model with long-context support (a 100K context window, measured at about 80,000 characters), and 14 times that of GPT-4 (a 32K context window, measured at about 25,000 characters).
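The ratios quoted in this item can be checked with a few lines of arithmetic. The sketch below is only illustrative: it uses the measured character counts given in the report, and the constant and function names are made up for this example.

```python
# Measured capacities in Chinese characters, as quoted in the report.
# The names below are hypothetical and exist only for this illustration.
BAICHUAN2_192K_CHARS = 350_000
CLAUDE2_100K_CHARS = 80_000
GPT4_32K_CHARS = 25_000


def capacity_ratio(model_chars: int, baseline_chars: int) -> float:
    """Return how many times larger model_chars is than baseline_chars."""
    return model_chars / baseline_chars


claude_ratio = capacity_ratio(BAICHUAN2_192K_CHARS, CLAUDE2_100K_CHARS)
gpt4_ratio = capacity_ratio(BAICHUAN2_192K_CHARS, GPT4_32K_CHARS)

print(f"vs Claude2: {claude_ratio:.1f}x")  # 350,000 / 80,000 = 4.375
print(f"vs GPT-4:   {gpt4_ratio:.1f}x")    # 350,000 / 25,000 = 14.0
```

The comparison is based on measured characters rather than nominal token windows, so the ratios differ from the plain 192K / 100K and 192K / 32K window ratios.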

ChatGPT evolves again! All in One

Link: https://news.miracleplus.com/share_link/11283

ChatGPT was quietly updated overnight, putting many startup projects at risk. It now supports uploading PDFs and other files for analysis, and it can automatically switch between tools within a single conversation, so DALL·E, the browser, data analysis and more can all be used in one go. The update made many people exclaim: many startup projects died today.

Alibaba Tongyi Qianwen Big Model App is launched: supports AI Q&A, creative copywriting and other functions

Link: https://news.miracleplus.com/share_link/11285

Recently, the Tongyi Qianwen App, built on Alibaba Cloud’s large model, was listed on major Android app stores. The version number is 1.0.2 and the installation package is 40.95 MB. According to the app description, Tongyi Qianwen is a very large-scale pre-trained model that can assist users with creative copywriting, office tasks, learning, fun everyday topics and more. According to reports, for creative copywriting the app can generate Xiaohongshu posts, write scripts, and rewrite and polish text; the office assistant offers code generation, code explanation, weekly report expansion and similar functions; the learning assistant offers Chinese-English translation, math problem solving, classical Chinese translation and other functions; and the fun Q&A feature supports high-emotional-intelligence replies, effusive compliments (“rainbow farts”), fitness plans and more.

Proving math theorems like building with Lego: GPT-3.5’s proof success rate reaches a new SOTA

Link: https://news.miracleplus.com/share_link/11286

As mathematicians gain more powerful tools and a deeper understanding of complex problems, they become able to solve problems that were previously out of reach. To simulate the iterative process that human mathematicians follow when proving theorems (decomposing complex problems, citing existing knowledge, and accumulating newly proved theorems), researchers from Sun Yat-sen University, Huawei and other institutions proposed LEGO-Prover, which implements a closed loop of generating, organizing, storing, retrieving and reusing mathematical theorems. LEGO-Prover enables GPT-3.5 to reach a new SOTA on both formal theorem proving datasets: on miniF2F-valid the proof success rate rises from 48.0% to 57.0%, and on miniF2F-test it rises from 45.5% to 50.0%. During the proof process, LEGO-Prover also generated more than 20,000 lemmas and added them to its growing library of theorems. Ablation studies show that these newly added skills do help in proving theorems, with the proof success rate on miniF2F-valid increasing from 47.1% to 50.4%.

Interpretable nucleotide language model based on Transformer and attention for pegRNA optimization design

Link: https://news.miracleplus.com/share_link/11287

Gene editing is an emerging and relatively precise genetic engineering technology that can modify specific target genes in an organism’s genome. Prime editor (PE) is a precision gene editing system developed by the team of Chinese-American scientist David R. Liu. PE is a promising gene editing tool, but because accurate and widely applicable methods are lacking, effectively optimizing the design of prime editing guide RNA (pegRNA) remains a challenge. Recently, a multidisciplinary, multi-institutional research team from Chongqing Medical University, Northwest Agriculture and Forestry University, Yunnan University for Nationalities, Zhejiang University School of Medicine, the Bioinformatics Center of AMMS, and the Institute of Mathematics and Systems Sciences, Chinese Academy of Sciences, developed Optimized Prime Editing Design (OPED), an interpretable nucleotide language model that uses transfer learning to improve its accuracy and versatility. OPED is used to predict pegRNA efficiency and to optimize pegRNA design.