Big Model Daily Collection, November 2

[Big Model Daily Collection, November 2] Jensen Huang: In just 2 years, NVIDIA and even the entire industry will be completely different; with “Silicon Immortal” Jim Keller at the helm, can Tenstorrent compete with NVIDIA? A former AMD executive on chip competition and trends; an Instagram version of “Character.ai” is coming

Jensen Huang: In just 2 years, NVIDIA and even the entire industry will be completely different

Link: https://news.miracleplus.com/share_link/11378

Jensen Huang made a prediction about the future: computing technology will advance a millionfold every decade, and in just 2 years NVIDIA, and indeed the entire industry, will be completely different. He bases this judgment on the fact that, for the first time in 60 years, two technological transformations are happening at once: AI and computing. The remarks come from Huang’s latest interview with Ryan Patel, host of HP’s The Moment program. On the show, Huang also revealed something surprising: wearing leather jackets all the time was not his own idea. Since 2013, the leather jacket has been almost inseparable from Huang’s image; even his self-introduction on Reddit reads “NVIDIA CEO, the man who wears leather clothing and repeats his words three times.”
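Taken at face value, a millionfold gain per decade implies roughly a fourfold gain each year. This is back-of-the-envelope arithmetic that assumes steady compound growth; it is not a figure from the interview:

$$
(10^{6})^{1/10} = 10^{0.6} \approx 3.98 \ \text{per year}, \qquad \left(10^{0.6}\right)^{2} = 10^{1.2} \approx 15.8 \ \text{over two years}
$$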

With “Silicon Immortal” Jim Keller at the helm, can Tenstorrent compete with NVIDIA? A former AMD executive on chip competition and trends

Link: https://news.miracleplus.com/share_link/11379

David Bennett, a former AMD and Lenovo executive, currently serves as chief customer officer (CCO) of Tenstorrent. In a recent interview with foreign media, Bennett said he believes future chips will combine the GPU and CPU so that data can be prepared and loaded faster, thereby accelerating model training, a trend already visible in Apple’s M series, released 3 years ago. Speaking of Apple: in June we also shared the views of “Silicon Immortal” Jim Keller, CEO of Tenstorrent, on AI chips and market competition. Keller is a hardware legend who developed Apple’s A4 and A5 processors and, from 2016 to 2018, worked on Tesla’s autonomous-driving hardware. Tenstorrent has raised more than $300 million from investors including Fidelity Ventures and Hyundai Motor Group, and it is currently following Bennett’s recommendations, offering both its own chips and cloud services.

Can GPT-4 pass as human? Turing test results released

Link: https://news.miracleplus.com/share_link/11380

Can machines think? To answer this question, Turing designed an imitation game that addresses it indirectly. In the original design, the game involves two witnesses and an interrogator. The two witnesses, one human and one artificial intelligence, each try to convince the interrogator, through a text-only interface, that they are human. The game is inherently open-ended, since the interrogator can ask anything, from romance to math, and Turing believed this property made it a broad test of machine intelligence. The game later became known as the Turing test, but there is ongoing debate about what exactly it measures and which systems can pass it. Large language models (LLMs) such as GPT-4 seem almost tailor-made for the Turing test: they generate smooth, natural text and have reached human-level performance on many language tasks. In fact, many people already speculate that GPT-4 may be able to pass it. Recently, Cameron Jones and Benjamin Bergen of the University of California, San Diego released a research report presenting the results of their empirical Turing test study of AI agents such as GPT-4.

GPT-4 gets even dumber and is reportedly caching past replies: it tells the same joke 800 times and ignores requests for a new one

Link: https://news.miracleplus.com/share_link/11381

Some users have found new evidence that GPT-4 has become “stupid.” One of them suspects that OpenAI caches historical responses and lets GPT-4 simply repeat previously generated answers. The most obvious example is telling jokes: even after he raised the model’s temperature, GPT-4 kept returning the same “scientists and atoms” joke, that old groaner “Why don’t scientists trust atoms? Because they make up everything.” In principle, the higher the temperature, the more likely the model is to produce unexpected words, so the same joke should not keep coming back. What’s more, even with the parameters left unchanged, rewording the prompt and stressing that it should tell a new, different joke does not help.
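Temperature works by dividing the model’s logits by T before the softmax, which flattens the next-token distribution as T grows. The Python sketch here uses made-up logits (`JOKE_STEP_LOGITS` is hypothetical, not taken from GPT-4) to show why identical output at high temperature looks suspicious under plain sampling. It says nothing about how OpenAI actually serves requests, and a model that is very confident about a joke can still repeat it.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw logits into probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three decoding steps; at each step, index 0 is the
# token that continues the "scientists and atoms" joke.
JOKE_STEP_LOGITS = [
    [4.0, 2.5, 2.0, 1.0],
    [3.5, 3.0, 1.5, 0.5],
    [5.0, 2.0, 1.0, 0.0],
]

def prob_same_joke(step_logits, temperature):
    """Probability that plain sampling picks token 0 at every step."""
    p = 1.0
    for logits in step_logits:
        p *= softmax_with_temperature(logits, temperature)[0]
    return p

for t in (0.5, 1.0, 1.5):
    print(f"T={t}: P(identical joke) = {prob_same_joke(JOKE_STEP_LOGITS, t):.3f}")
```

Because index 0 holds the largest logit at every step, its probability shrinks as T rises, so the chance of sampling the exact same token sequence falls as temperature increases.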

Xiaobing CEO Li Di: Commercializing large models is like playing Minesweeper | REAL Technology Conference

Link: https://news.miracleplus.com/share_link/11382

Xiaobing CEO Li Di: commercializing large models is a “minesweeping” process; Lin Yonghua, Vice President of the Beijing Academy of Artificial Intelligence (BAAI): practitioners need to build an open-source ecosystem, a “Linux for large models”; Wang Xiaochuan, CEO of Baichuan Intelligence: intelligence is not logical reasoning but an ability of abstraction and metaphor; artificial intelligence will bring about a “counter-industrial revolution”; excellent programmers are willing to be replaced by AI… These are highlights from guest speeches at the third REAL Technology Conference. Beyond the keynote speeches, more than 20 guests shared their views on hot technology topics such as artificial intelligence. The guests, including entrepreneurs, investors, engineers, and university scholars, discussed the development of AI from different perspectives.

How good is FP8 for training large models? Microsoft: 64% faster than BF16, with 42% less memory

Link: https://news.miracleplus.com/share_link/11383

Large language models (LLMs) have unprecedented language understanding and generation capabilities, but unlocking these advanced capabilities requires enormous model scale and training compute. In this context, especially as models scale toward the superintelligence envisioned by OpenAI, low-precision training is one of the most effective and critical techniques, offering a small memory footprint, fast training, and low communication overhead. Currently, most training frameworks (such as Megatron-LM, MetaSeq, and Colossal-AI) train LLMs by default with FP32 full precision or FP16/BF16 mixed precision. That still does not push the envelope: with the release of NVIDIA’s H100 GPU, FP8 is becoming the next-generation low-precision data type. In theory, compared with current FP16/BF16 mixed-precision training, FP8 can deliver a 2x speedup and cut memory and communication costs by 50%–75%. Despite this, support for FP8 training remains limited. NVIDIA’s Transformer Engine (TE) uses FP8 only for GEMM computations, which yields very limited end-to-end gains in speed, memory, and communication. Microsoft’s newly open-sourced FP8-LM mixed-precision framework largely addresses this problem: it is highly optimized and uses the FP8 format throughout the forward and backward passes of training, greatly reducing the system’s compute, memory, and communication overhead.
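To make the idea of an FP8 data type concrete, here is a small pure-Python sketch. It is not FP8-LM’s code: it simulates rounding to the E4M3 variant of FP8 (3 mantissa bits, largest finite value 448) with a single per-tensor scaling factor, a common way to keep values inside FP8’s narrow range, and it assumes values saturate at ±448 on overflow. The gradient values are hypothetical. The byte counts also suggest one plausible reading of the 50% to 75% range: FP8 halves the storage of a BF16 tensor and quarters that of an FP32 one.

```python
import math

# OCP FP8 E4M3 ("E4M3FN") parameters: 4 exponent bits (bias 7), 3 mantissa bits.
E4M3_MAX = 448.0          # largest finite magnitude
E4M3_MIN_NORMAL_EXP = -6  # smallest normal exponent; below it values are subnormal
E4M3_MANTISSA_BITS = 3

def round_to_e4m3(v):
    """Round a Python float to the nearest E4M3 value, saturating at +/-448."""
    if v == 0.0 or math.isnan(v):
        return v
    sign = -1.0 if v < 0 else 1.0
    a = abs(v)
    if math.isinf(a):
        return sign * E4M3_MAX
    # frexp gives a = m * 2**exp with 0.5 <= m < 1, so the binade exponent is exp - 1.
    _, exp = math.frexp(a)
    e = max(exp - 1, E4M3_MIN_NORMAL_EXP)  # subnormals share the smallest spacing
    quantum = 2.0 ** (e - E4M3_MANTISSA_BITS)  # gap between adjacent E4M3 values here
    q = round(a / quantum) * quantum  # Python's round() is round-half-to-even
    return sign * min(q, E4M3_MAX)

def quantize_per_tensor(xs):
    """Scale so the largest magnitude maps to E4M3_MAX, then round each element."""
    amax = max(abs(x) for x in xs)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(x * scale) for x in xs], scale

def dequantize(qs, scale):
    """Undo the per-tensor scale to recover approximate original values."""
    return [q / scale for q in qs]

# Bytes per element for each storage format.
BYTES_PER_ELEMENT = {"fp32": 4, "bf16": 2, "fp8": 1}

def fp8_saving_vs(fmt):
    """Fraction of storage saved by holding a tensor in FP8 instead of `fmt`."""
    return 1 - BYTES_PER_ELEMENT["fp8"] / BYTES_PER_ELEMENT[fmt]

grads = [0.0031, -0.012, 0.00047, 0.25, -0.0009]  # hypothetical gradient values
q, scale = quantize_per_tensor(grads)
restored = dequantize(q, scale)
print("scale:", scale)
print("restored:", restored)
print("saving vs BF16:", fp8_saving_vs("bf16"), "vs FP32:", fp8_saving_vs("fp32"))
```

Per-tensor scaling spends the format’s limited range on the values actually present. The trade-off is precision: with only 3 mantissa bits, each normal value carries a relative rounding error of up to 2^-4 (6.25%), one reason mixed-precision schemes still keep some quantities at higher precision.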

Supporting further editing and import into Unreal Engine 5: Stable Diffusion gains 3D generation features

Link: https://news.miracleplus.com/share_link/11384

When it comes to large models, Stability AI’s Stable Diffusion, launched in 2022, is a leader, continuing to provide creative storytellers with the AI tools they need. However, the model is mainly used for 2D image generation. Today, Stability AI unveiled further image-enhancement capabilities that produce better-looking images more cheaply and quickly. What’s more, there are now new tools that can handle any type of 3D content creation.

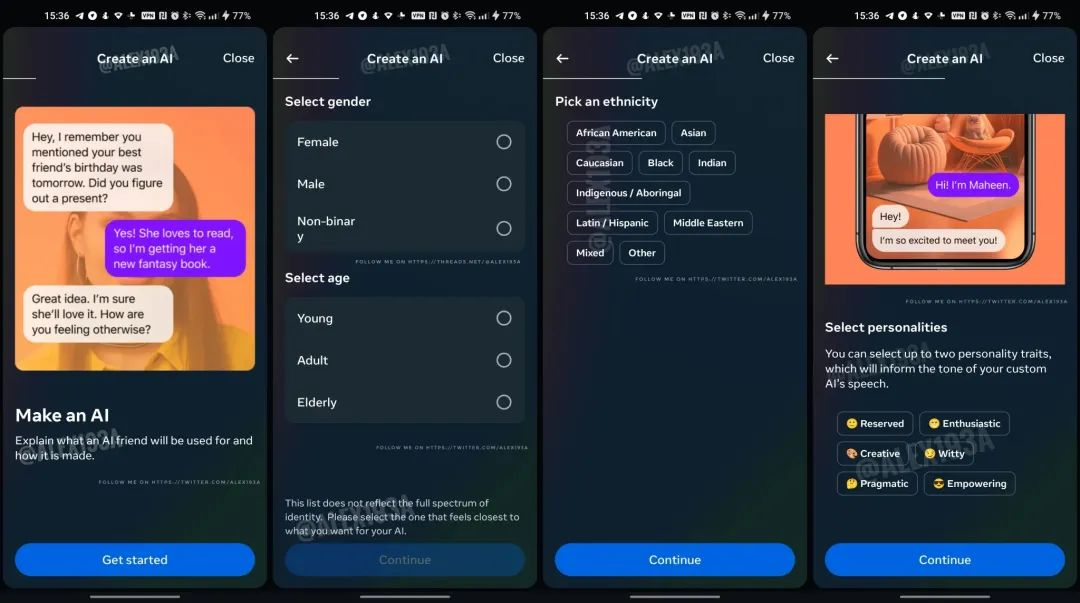

The Instagram version of “Character.ai” is coming

Link: https://news.miracleplus.com/share_link/11385

Last month, Meta launched 28 AI chatbots that can chat with users on Instagram, Messenger, and WhatsApp, some of which are portrayed by celebrities such as Kendall Jenner, Snoop Dogg, Tom Brady, and Naomi Osaka. Now, according to screenshots shared by researcher Alessandro Paluzzi, Instagram is developing a feature called “AI Friend.” Users can customize an AI friend to their own preferences and then chat with it: asking it questions, discussing challenges, brainstorming ideas, and more.