Collection of Large Model Daily on February 21st

[Collection of Large Model Daily on February 21st] Learn to assemble a circuit board in 20 minutes! The precision control success rate of the open source SERL framework is 100%, and the speed is three times that of humans; Sora’s new video is only posted to TikTok: OpenAI has gained 100,000 fans in 4 days; Karpathy’s new video is popular again: building GPT Tokenizer from scratch; running LIama2 requires 84 million yuan, The cost estimation of the fastest AI inference chip has sparked heated discussions

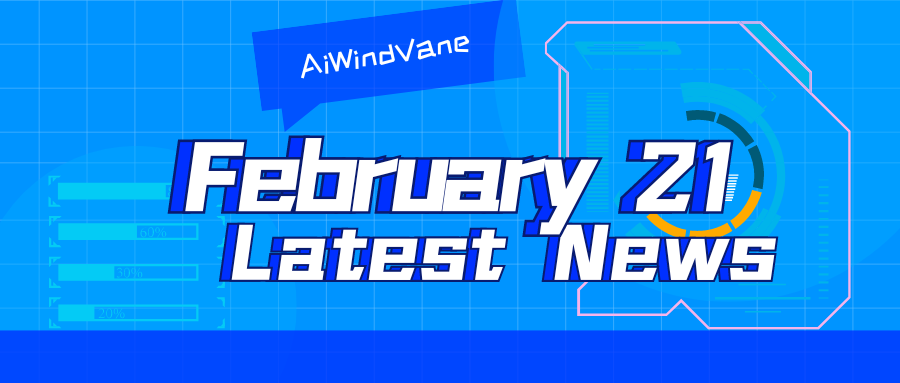

Learn to assemble a circuit board in 20 minutes! The open source SERL framework has a 100% precision control success rate and is three times faster than humans

Link: https://news.miracleplus.com/share_link/18873

In recent years, significant progress has been made in the field of robot reinforcement learning technology, such as quadruped walking, grasping, dexterous manipulation, etc., but most of them are limited to the laboratory demonstration stage. Widely applying robot reinforcement learning technology to actual production environments still faces many challenges, which to a certain extent limits its application scope in real scenarios. In the process of practical application of reinforcement learning technology, it is necessary to overcome multiple complex problems including reward mechanism setting, environment reset, sample efficiency improvement, and action safety guarantee. Industry experts emphasize that solving the many problems in the actual implementation of reinforcement learning technology is as important as the continuous innovation of the algorithm itself. Faced with this challenge, scholars from the University of California, Berkeley, Stanford University, the University of Washington, and Google jointly developed an open source software framework called the Efficient Robot Reinforcement Learning Kit (SERL), dedicated to promoting the application of reinforcement learning technology in actual robots. of widespread use.

The first large-scale multi-language evaluation, supporting 7 languages, 7B open source LLM in the biomedical field

Link: https://news.miracleplus.com/share_link/18874

Large Language Models (LLMs) have been applied in professional fields such as healthcare and medicine. Although there are various open source LLMs tailored for health environments, there are still significant challenges in applying generic LLMs to the medical field. Recently, research teams from Avignon Université, Nantes Université and Zenidoc in France developed BioMistral, an open source LLM tailored for the biomedical field, using Mistral as its basic model and in Further pretraining was performed on PubMed Central. The researchers comprehensively evaluated BioMistral against a benchmark consisting of 10 established English-language medical question answering (QA) tasks. Lightweight models obtained through quantization and model merging methods are also explored. The results demonstrate BioMistral’s superior performance compared to existing open source medical models and its competitive advantage compared to proprietary models. Finally, to address the issue of limited data outside of English and to assess the multilingual generalization ability of medical LLM, the benchmark was automatically translated and evaluated into 7 other languages. This marks the first large-scale multilingual evaluation of LLM in medicine.

OPPO Liu Zuohu: In 10 years, mobile phones will still be the best carrier of AI

Link: https://news.miracleplus.com/share_link/18875

How should mobile phone AI functions be implemented? Will the experience of the most important smart device of the past era be upgraded in the AI era, or will it be replaced by new devices? A few days ago, Meizu announced that it would stop the development of new traditional “smartphone” projects and devote all its efforts to a new generation of AI devices, which caused a small-scale heated discussion. On February 20, as if in response, OPPO, one of the most active mobile phone manufacturers in deploying AI functions to mobile phones, announced its AI strategy. OPPO has been laying out AI functions for mobile phones very early. In 2020, OPPO launched its first large AI model. In 2023, a year when large language models are booming, OPPO has determined its layout of AI mobile phones. In early 2024, OPPO released Find X7, which is the first mobile phone that can apply a 7 billion parameter large language model on the device side.

Sora’s new video is only posted on TikTok: OpenAI has gained 100,000 followers in 4 days

Link: https://news.miracleplus.com/share_link/18876

Sora’s new video has become “TikTok exclusive”. Quietly, OpenAI officially entered TikTok, and with the brainwashing soundtrack, it made people unable to stop. It attracted fans like crazy: in just 4 days, it gained 100,000 followers and 500,000 likes – this is still no shooting or publicity. in the case of. A16z partner exclaimed, if this was posted in the information flow, there would be absolutely no way to tell the truth from the false.

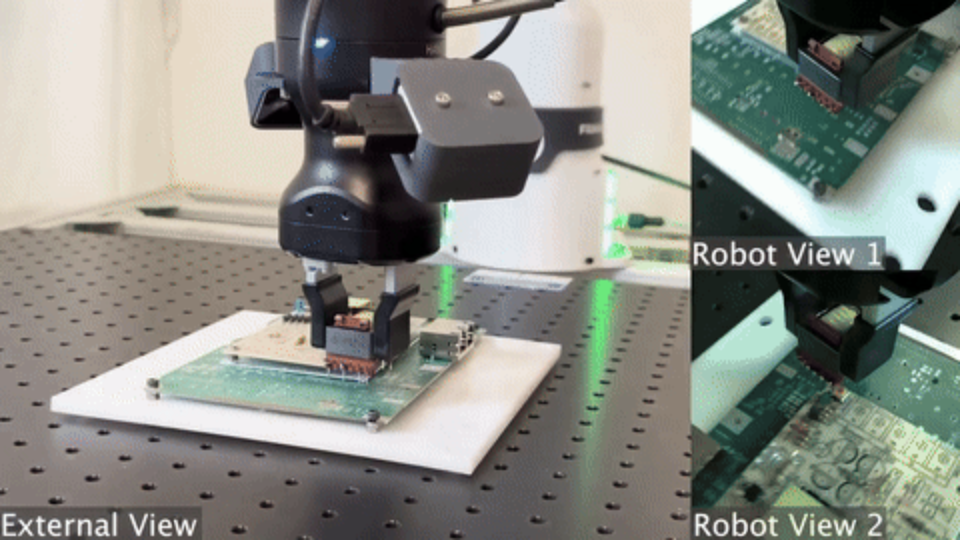

Karpathy’s new video goes viral again: Building GPT Tokenizer from scratch

Link: https://news.miracleplus.com/share_link/18877

After technology guru Kapasi left OpenAI, business has been quite active. No, the new project on the front foot has just been launched, and a new teaching video on the back foot has been released for everyone: this time, it teaches us step by step how to build a GPT Tokenizer (word segmenter), and it is still a familiar length (a full 2 hours and 13 minutes). Tokenizer is a completely independent stage in the large language model pipeline. They have their own training set and algorithm (such as BPE, byte pair encoding), and implement two functions after training is completed: encoding from string to token, and decoding from token back to string. Why do we need to pay attention to it? Kapasi pointed out: Because many strange behaviors and problems in LLM can be traced back to it.

It costs 84 million yuan to run LIama2, and the cost estimation of the fastest AI inference chip has attracted heated discussion

Link: https://news.miracleplus.com/share_link/18878

To achieve the fastest large model inference in history, it will cost US$11.71 million (84.1 million yuan). For the same project, the cost of using NVIDIA GPU is only US$300,000. Regarding the change of ownership of the most powerful AI chip to Groq, we may have to let the bullets fly for a while longer. In the past two days, Groq has made a stunning debut. It uses a chip that is said to be “100 times more cost-effective than NVIDIA” and can generate large models of 500 tokens per second without feeling any delay. After the uproar, some rational discussions began to emerge, mainly regarding the benefits and costs of Groq. According to a rough calculation by netizens, the demo now requires 568 chips and costs US$11.71 million. Participating in the discussion on Groq’s cost issues were computer science students, cloud vendors that also provide inference services, and even former Groq employees fighting against current employees.

Aiming to build the first general biological AI model, former Google DeepMind scientists join forces to create Biooptimus

Link: https://news.miracleplus.com/share_link/18879

As the French startup ecosystem continues to thrive, companies like Mistral, Poolside, and Adaptive. Paris-based Biooptimus emerged from stealth on February 20 with a $35 million seed round on its mission to build the first general artificial intelligence foundational model for biology. New open science models will connect biology with generative AI at different scales – from molecules to cells, tissues and whole organisms. Bioptimus has joined forces with a team of Google DeepMind alumni and scientists from Owkin, the AI biotech startup itself a French unicorn, to leverage AWS compute and Owkin’s data generation capabilities and access to data from leading academic hospitals around the world. Multimodal patient data.