Large Model Daily Report Roundup: October 18

【October 18 Large Model Daily Roundup】Breaking: the United States restricts exports of Nvidia H800 GPUs to China; Foxconn and Nvidia are building “artificial intelligence factories” to accelerate self-driving car development; Microsoft-affiliated research uncovers flaws in GPT-4; a new way to learn in the AI era: Tmall Genie releases the Z20 large-model multi-cognitive learning machine

Breaking: US restricts Nvidia H800 GPU exports to China

Link: https://news.miracleplus.com/share_link/10998

On October 17, 2023, the U.S. government tightened export controls on cutting-edge artificial intelligence chips. The new requirements update existing rules and impose strict restrictions on sales of high-performance semiconductors by Nvidia and other chipmakers to China. The U.S. Department of Commerce's update extends the comprehensive export controls it first implemented in October 2022; it is designed to reflect technological advances while making it harder for companies to find ways around the restrictions. GPUs from companies such as Nvidia, AMD and Intel have become key components for training large models, and the rapid development of artificial intelligence has set off a rush to buy the latest chips.

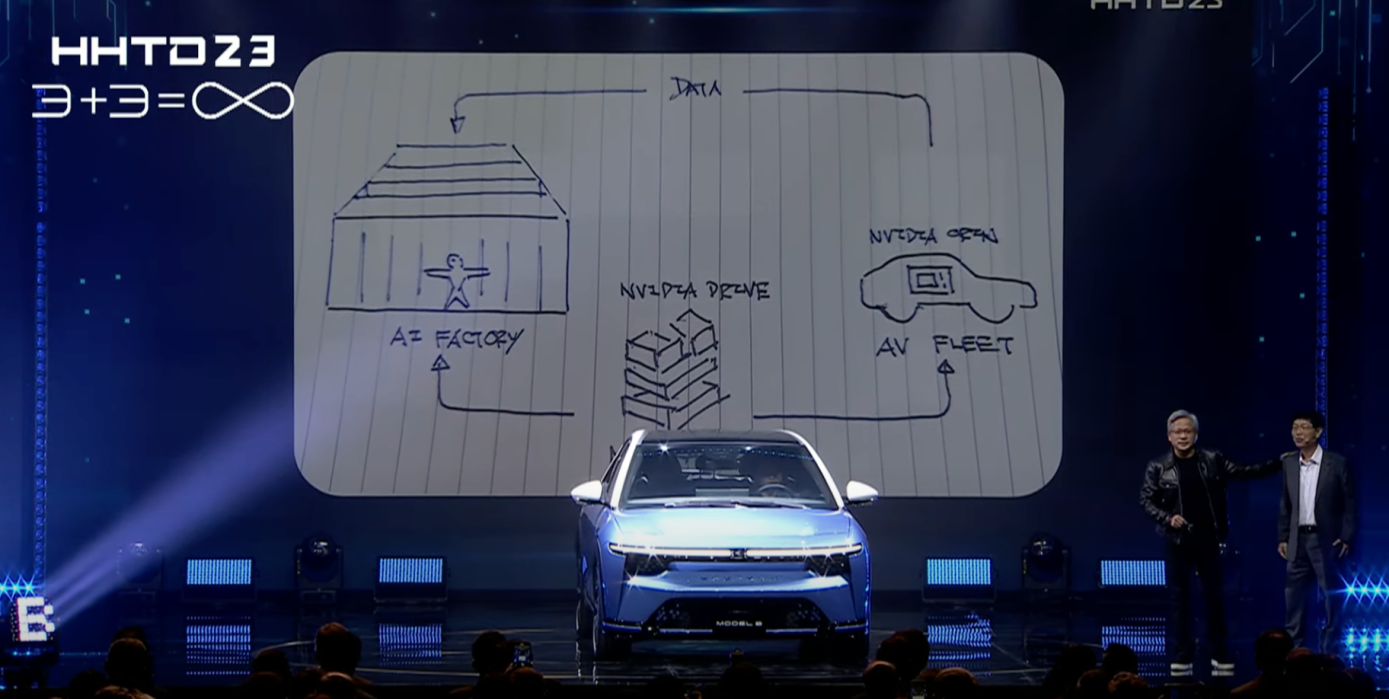

Foxconn and Nvidia are building “artificial intelligence factories” to accelerate the development of self-driving cars

Link: https://news.miracleplus.com/share_link/11019

Nvidia and Foxconn are collaborating to build so-called “artificial intelligence factories,” a new type of data center expected to provide the supercomputing power needed to accelerate the development of self-driving cars, autonomous machines and industrial robots. Nvidia founder and CEO Jensen Huang and Foxconn Chairman and CEO Young Liu announced the collaboration at Hon Hai Tech Day in Taiwan on Tuesday. The AI factory is built on Nvidia's GPU computing platform and will process and refine large amounts of data, transforming it into valuable AI models and information.

Treating the LLM as an operating system with unlimited “virtual” context: Berkeley's new work has earned 1.7k stars

Link: https://news.miracleplus.com/share_link/10999

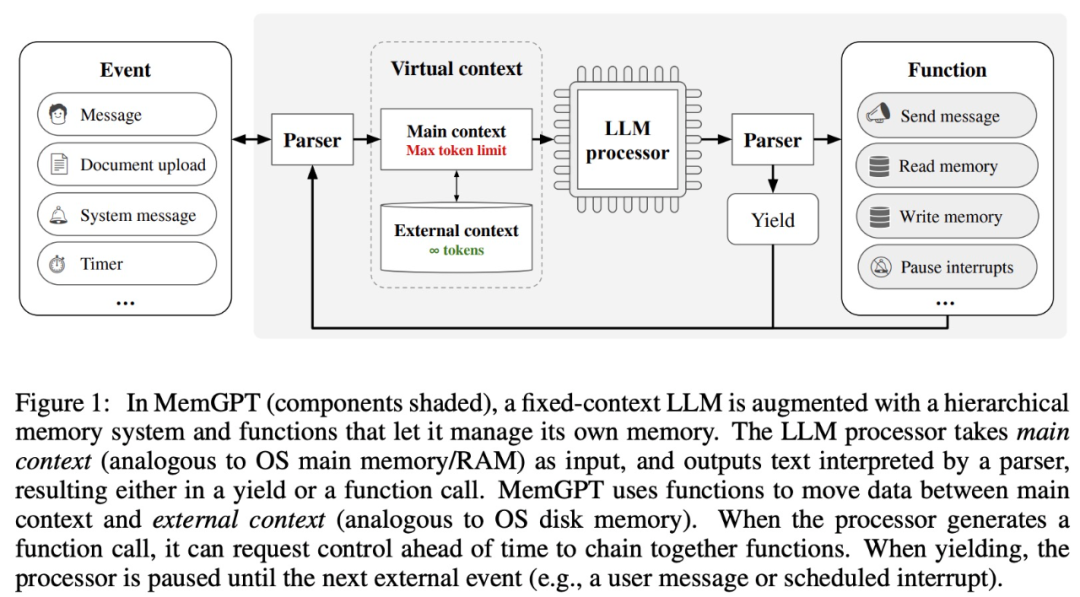

Giving large language models stronger long-context capabilities is currently a hot topic across the industry. In a new paper, researchers at the University of California, Berkeley cleverly connect LLMs with operating systems to extend the usable context length. In recent years, large language models (LLMs) and their underlying Transformer architecture have become the cornerstone of conversational AI and have spawned a wide range of consumer and enterprise applications. Despite considerable progress, the fixed-length context window of LLMs greatly limits their usefulness for reasoning over long conversations or long documents. Even for the most widely used open-source LLMs, the maximum input length supports only a few dozen message exchanges or inference over short documents. The paper explores how to provide the illusion of infinite context while still using fixed-context models. The approach borrows the idea of virtual memory paging, which lets applications work with data far larger than the available physical memory. Building on this idea, the researchers exploit recent advances in the function-calling capabilities of LLM agents to design MemGPT, an OS-inspired LLM system for virtual context management.
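The report does not describe MemGPT's actual implementation. As a rough illustration of the paging idea only, the Python sketch below keeps a fixed token budget for the “main context” a model would see, pages the oldest messages out to external storage when the budget is exceeded, and lets a search call (standing in for an LLM function call) page matching messages back in. The class `VirtualContext`, its methods, and the one-word-per-token estimate are all hypothetical assumptions, not MemGPT's API.

```python
from collections import deque


class VirtualContext:
    """Hypothetical sketch (not MemGPT's API): a fixed-size main context
    backed by unbounded external storage, in the spirit of virtual memory paging."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens          # fixed context window budget
        self.main_context: deque = deque()    # messages the LLM would actually see
        self.external_storage: list = []      # messages paged out of the window

    @staticmethod
    def count_tokens(text: str) -> int:
        # Assumption: one token per whitespace-separated word (real tokenizers differ).
        return len(text.split())

    def used_tokens(self) -> int:
        return sum(self.count_tokens(m) for m in self.main_context)

    def append(self, message: str) -> None:
        self.main_context.append(message)
        # Page out the oldest messages until the window fits the budget again,
        # always keeping the newest message in context.
        while self.used_tokens() > self.max_tokens and len(self.main_context) > 1:
            self.external_storage.append(self.main_context.popleft())

    def search_and_page_in(self, keyword: str) -> list:
        # Hypothetical stand-in for a function call the LLM could issue to recall old data.
        hits = [m for m in self.external_storage if keyword.lower() in m.lower()]
        for hit in hits:
            self.append(f"[recalled] {hit}")
        return hits


ctx = VirtualContext(max_tokens=13)
ctx.append("user: my cat is called Miso")
ctx.append("assistant: nice name for a cat")
ctx.append("user: what should I cook tonight")  # window overflows; oldest message is paged out
recalled = ctx.search_and_page_in("miso")        # page the evicted fact back into the window
print(list(ctx.main_context))
print(ctx.external_storage)
```

Paging the recalled message back in itself pushes another old message out, which mirrors how a fixed window forces a trade-off between what stays visible and what is retrievable on demand. A fuller system might summarize evicted messages rather than move them verbatim; that choice is left out of this sketch.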

On-device AI inference and efficient PyTorch model deployment: the official new tool is open source and already in use at Meta

Link: https://news.miracleplus.com/share_link/11000

With ExecuTorch now open source, AI applications around the world can run locally on devices without connecting to a server or the cloud. ExecuTorch can be understood as a PyTorch platform that provides the infrastructure to run PyTorch programs everywhere from AR/VR wearables to standard iOS and Android mobile deployments. Its biggest advantage is portability: it runs on mobile and embedded devices. It can also improve developer productivity. Meta has reportedly validated the technology and used it in the latest generation of Ray-Ban smart glasses, and it is also part of Meta's recently released Quest 3 VR headset. Meta said that, as part of the open-source PyTorch project, it aims to further advance research on this technology and usher in a new era of on-device AI inference.

Microsoft-affiliated research finds GPT-4 flaw

Link: https://news.miracleplus.com/share_link/11001

Sometimes following instructions too precisely can get you into trouble, if you're a large language model. That's the conclusion of a new Microsoft-affiliated scientific paper that examines the “trustworthiness” and “toxicity” of large language models (LLMs), including OpenAI's GPT-4 and GPT-3.5 (GPT-4's predecessor). The co-authors write that GPT-4 can be prompted more easily than other LLMs to spit out toxic, biased text, possibly because it follows “jailbreak” prompts designed to bypass the model's built-in safety measures more faithfully. In other words, GPT-4's good “intentions” and improved comprehension can, in the wrong hands, lead it astray.

Just in: Gartner releases its top ten strategic technology trends for 2024

Link: https://news.miracleplus.com/share_link/11020

On October 17, Gartner released the top ten strategic technology trends that enterprises need to explore in 2024: democratized generative AI; AI trust, risk and security management; AI-augmented development; intelligent applications; augmented-connected workforce; continuous threat exposure management; machine customers; sustainable technology; platform engineering; and industry cloud platforms. Bart Willemsen, research vice president at Gartner, said: “Because of technological change and socioeconomic uncertainty, we must act proactively and strategically build resilience rather than resort to ad hoc measures. IT leaders are in a unique position to develop strategic plans for how technology investments can help their businesses succeed amid these uncertainties and pressures.”

A new way to learn in the AI era: Tmall Genie releases the Z20 large-model multi-cognitive learning machine

Link: https://news.miracleplus.com/share_link/11002

According to news on October 18, Alibaba launched the Tmall Genie Z20, billed as a truly intelligent large-screen, eye-protection learning machine. Unlike earlier learning hardware that focused on “snap a photo of a question to find the solution” and “delivering online courses,” this 12.2-inch tablet, just 6.95 mm thin, combines large-model and multimodal AI cognitive capabilities. With maximum extensibility and a multi-subject configuration, it supports two learning modes: “precise reinforcement” and “independent exploration.” Official information shows that the Future Elf Z20 learning machine offers features such as personalized dialogue, subject guidance, and authoritative content.