October 19th Big Model Daily Collection

[October 19th Big Model Daily Collection] Transformer authors' startup goes multimodal! It even reads academic charts, with ultra-fast 100-millisecond responses | Free trial; OpenAI's new model development hits setbacks: is sparsity the key to cutting the cost of large models?; OpenAI image detection tool revealed, CTO: 99% of AI-generated images can be identified;

Transformer authors' startup goes multimodal! It even reads academic charts, with ultra-fast 100-millisecond responses | Free trial

Link:https://news.miracleplus.com/share_link/11024

Adept, the startup co-founded by authors of the Transformer paper, has released a new work: Fuyu-8B, a multimodal large model with 8 billion parameters. The release is open source, and the model weights are available on Hugging Face. The model has strong image-understanding capabilities, handling photos, charts, PDFs and user-interface screenshots, and it can even work out the relationships among the organisms in a complex food web.

OpenAI’s new model development has encountered setbacks. Is sparsity the key to reducing the cost of large models?

Link:https://news.miracleplus.com/share_link/11025

In late 2022, as ChatGPT became a global sensation, engineers at OpenAI began working on a new artificial intelligence model, codenamed Arrakis, which was designed to let OpenAI run its chatbot at a lower cost. But according to people familiar with the matter, by mid-2023 OpenAI had canceled the release of Arrakis because the model did not run as efficiently as the company had expected. The failure cost OpenAI valuable time and forced it to divert resources to developing other models. The Arrakis program had been considered valuable to both OpenAI and Microsoft, which were negotiating a $10 billion investment and product deal at the time, and according to a Microsoft employee familiar with the matter, its failure disappointed some Microsoft executives. More broadly, the setback is a sign that the future of AI may be full of unpredictable pitfalls.

OpenAI image detection tool revealed, CTO: 99% of AI-generated images can be identified

Link:https://news.miracleplus.com/share_link/11026

OpenAI is preparing to launch a tool for recognizing AI-generated images. According to the latest reports, the company is developing a detection tool that, according to Chief Technology Officer Mira Murati, is highly accurate, with an accuracy rate of 99%. It is currently in internal testing and will be released to the public soon. That figure is genuinely exciting, given that OpenAI's earlier attempt at detecting AI-written text ended in failure: its classifier correctly identified only 26% of AI-written text.

Microsoft Azure OpenAI now supports fine-tuning on your own data! Build your own custom ChatGPT

Link:https://news.miracleplus.com/share_link/11027

On October 17, Microsoft announced on its official website that developers can now fine-tune the GPT-3.5-Turbo, Babbage-002 and Davinci-002 models on their own data in the Azure OpenAI public preview. This lets developers build custom ChatGPT-style assistants from their own datasets. For example, fine-tuning on large volumes of medical data could produce a ChatGPT assistant specialized in medicine that answers questions about medical records, professional terminology, treatment plans and more. Industries around the world have accumulated huge amounts of high-quality data over years or even decades, and using and querying that data efficiently has become a major challenge. An AI assistant fine-tuned on an organization's own data can address this pain point while improving the accuracy and security of its output, making it a powerful tool for cutting costs and improving efficiency.
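Fine-tuning starts with a training file. Below is a minimal sketch, assuming the OpenAI-style chat format that GPT-3.5-Turbo fine-tuning expects: a JSON Lines file in which each line holds a `messages` list of system, user and assistant turns. The system prompt, questions, answers and output path are hypothetical placeholders, and Babbage-002/Davinci-002 use a different prompt/completion layout that is not shown here.

```python
import json
import os
import tempfile

# Hypothetical system prompt and examples; a real dataset would hold many
# curated examples drawn from the organization's own records.
SYSTEM_PROMPT = "You are an assistant that answers questions about our clinical documentation."
PAIRS = [
    ("What does the abbreviation 'BP' mean in our notes?", "BP stands for blood pressure."),
    ("Summarize the discharge note for case A-001.", "<clinician-approved summary for case A-001>"),
]

# Written to the system temp directory for this demo.
OUTPUT_PATH = os.path.join(tempfile.gettempdir(), "training_data.jsonl")


def to_chat_record(question: str, answer: str) -> dict:
    """Build one training example in the chat-style 'messages' layout."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(path: str, records: list) -> None:
    """JSON Lines: one JSON object per line, no surrounding array."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


records = [to_chat_record(q, a) for q, a in PAIRS]
write_jsonl(OUTPUT_PATH, records)
print(f"wrote {len(records)} examples to {OUTPUT_PATH}")
```

The resulting file is what would be uploaded as training data; uploading it and creating the fine-tuning job happen through Azure OpenAI Studio or its API and are not sketched here.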

Racks up 4k stars: AI plays Pokémon through reinforcement learning and succeeds after 20,000 games

Link:https://news.miracleplus.com/share_link/11028

"Pocket Monsters" is an unofficial Chinese translation of "Pokémon". Since 1996 the series has spanned several generations and become a classic for many players. The games look simple, but as strategy games, their characters, attributes, tactics and systems make them easy to pick up and hard to master. If an AI were trained to play Pokémon, how good do you think it would be? Twitter user @computerender used reinforcement learning to train an AI to play Pokémon. He documented the process in a video that not only shows the training vividly but also explains the methods in detail.

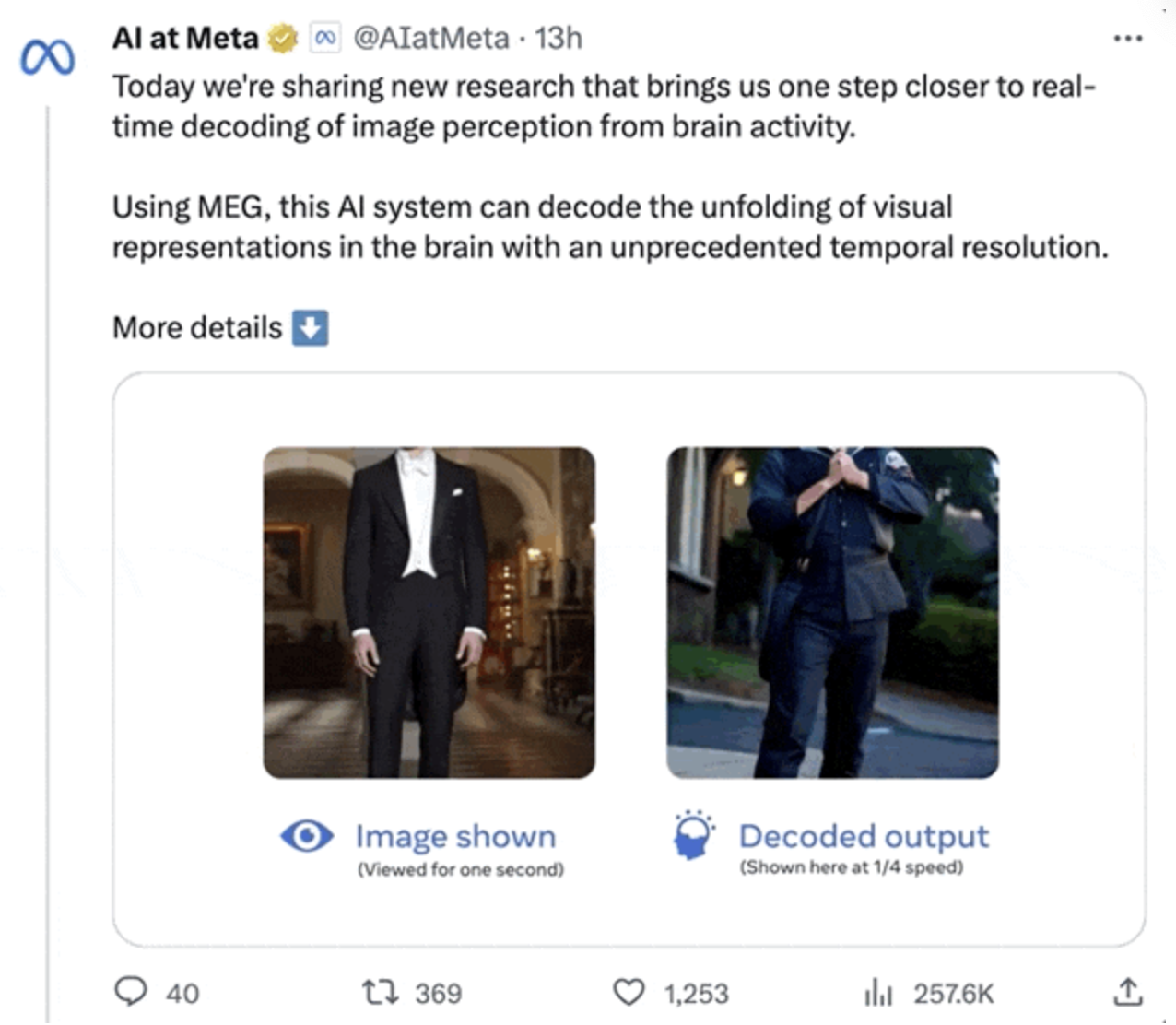
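The summary above does not spell out the training method. To make "training through reinforcement learning" concrete, here is a generic tabular Q-learning loop on a hypothetical six-cell corridor where the agent is rewarded only for reaching the goal. This is a stand-in technique chosen for illustration, not the algorithm used in the Pokémon project, whose game environment is far richer.

```python
import random

N_STATES = 6              # corridor cells 0..5; cell 5 is the goal
GOAL = N_STATES - 1
ACTIONS = (-1, 1)         # move left or move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1  # learning rate, discount, exploration rate


def step(state: int, action: int):
    """Toy environment: reward 1.0 only on reaching the goal, which ends the episode."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def choose_action(q, state, rng):
    """Epsilon-greedy choice with random tie-breaking among equally valued actions."""
    if rng.random() < EPSILON:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes: int = 500, seed: int = 0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):          # one "game" per episode
        state, done = 0, False
        while not done:
            action = choose_action(q, state, rng)
            next_state, reward, done = step(state, action)
            # Value of the best follow-up action; zero once the episode has ended.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            q[(state, action)] += ALPHA * (reward + GAMMA * future - q[(state, action)])
            state = next_state
    return q


q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(GOAL)]
print(policy)  # greedy action in each non-goal cell after training
```

After enough episodes the learned policy moves right in every cell, because the discounted value of heading toward the reward outweighs stepping away from it; large projects replace the lookup table with a neural network and the corridor with the real game.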

AI decodes brain signals in real time, reconstructing key visual features of images 7x faster; shared by LeCun

Link:https://news.miracleplus.com/share_link/11029

AI can now decode brain signals in real time. This is not hype but a new study by Meta, which can guess the image a person is looking at within 0.5 seconds from their brain signals and use AI to reconstruct it in real time. Earlier AI systems could already reconstruct images from brain signals fairly accurately, but they had one shortcoming: they were not fast enough. To address this, Meta developed a new decoding model that makes AI image retrieval 7 times faster, so it can read what a person is looking at almost instantly and make a rough guess.

All failed! Stanford’s 100-page paper ranks the transparency of large models, with GPT-4 only ranking third

Link:https://news.miracleplus.com/share_link/11030

In today's fierce competition among hundreds of models, whose large model is the most transparent? Transparency here covers, for example, information about how a model is built, how it works, and how people use it. This question now has an answer: Stanford University's HAI and other research institutions recently released a joint study introducing a purpose-built scoring system, the Foundation Model Transparency Index. It rates 10 major foundation models on 100 indicators and comprehensively evaluates how transparent each one is.
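The index's scoring can be pictured as an additive checklist. A minimal sketch, assuming each indicator is a yes/no item worth one point, so a model's score is simply the number of indicators it satisfies; the model names and the four indicators below are hypothetical toy data, not results from the study.

```python
from typing import Dict, List, Tuple


def transparency_score(indicators: Dict[str, bool]) -> int:
    """One point per satisfied indicator (assumed binary and equally weighted)."""
    return sum(1 for met in indicators.values() if met)


def rank(models: Dict[str, Dict[str, bool]]) -> List[Tuple[str, int]]:
    """Score every model and sort from most to least transparent."""
    scores = [(name, transparency_score(ind)) for name, ind in models.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical toy data: 4 indicators instead of 100, made-up model names.
toy = {
    "model_a": {"data_sources": True, "compute": False, "license": True, "usage_policy": True},
    "model_b": {"data_sources": False, "compute": False, "license": True, "usage_policy": True},
    "model_c": {"data_sources": True, "compute": True, "license": True, "usage_policy": True},
}

for name, score in rank(toy):
    print(f"{name}: {score}/{len(toy[name])}")
```

With 100 indicators, a score reads directly as a percentage of the checklist a developer discloses.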

What if large models keep getting the "facts" wrong? A survey of more than 300 papers

Link:https://news.miracleplus.com/share_link/11031

Large models show great ability and potential in mastering factual knowledge, but they still have problems such as a lack of domain knowledge, a lack of up-to-date knowledge, and possible hallucinations, which greatly limit their applications and reliability. Some recent work has studied the factuality of large models, but no article has yet systematically covered its definition, impact, evaluation, analysis and enhancement. Westlake University, together with ten research institutions in China and abroad, has published a survey on the factuality of large models, "Survey on Factuality in Large Language Models: Knowledge, Retrieval and Domain-Specificity". The survey covers more than 300 papers and organizes and summarizes the topic in detail: the definition and impact of factuality, how to evaluate it, the mechanisms behind factual knowledge in large models and the causes of factual errors, and methods for enhancing factuality. Its goal is to help researchers and developers in academia and industry better understand the factuality of large models and improve the models' knowledge and reliability.

IDC: Global generative AI spending will reach $143 billion by 2027

Link:https://news.miracleplus.com/share_link/11032

IDC, the world-renowned market research and advisory firm, published a forecast on its official website: global spending on generative AI (GenAI) will reach US$143 billion in 2027, a compound annual growth rate (CAGR) of 73.3% from 2023 to 2027. This spending covers GenAI software as well as related infrastructure hardware and IT/business services. In 2023, enterprises worldwide will invest nearly US$16 billion in generative AI solutions. According to IDC, this growth rate is more than twice that of overall AI spending and almost 13 times the CAGR of worldwide IT spending over the same period.
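The 2023 and 2027 figures can be cross-checked with the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1. A minimal Python sketch, assuming four compounding steps between 2023 and 2027 and treating "nearly US$16 billion" as exactly 16:

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Grow start_value at a fixed annual rate for the given number of years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the IDC summary, in US$ billions.
spend_2023 = 16.0          # "nearly US$16 billion", taken as exactly 16 here
spend_2027 = 143.0
periods = 2027 - 2023      # four compounding steps

implied = cagr(spend_2023, spend_2027, periods)
projected = project(spend_2023, 0.733, periods)

print(f"implied CAGR: {implied:.1%}")
print(f"16 grown at 73.3% for {periods} years: {projected:.0f}")
```

The implied rate comes out at about 72.9%, and growing US$16 billion at 73.3% a year for four years lands at roughly US$144 billion, so the quoted figures agree to within rounding.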