January 2 Large Model Daily Collection

[January 2 Large Model Daily Collection] Is there no solution to large model hallucination? Theory proves that calibrated LMs must hallucinate; Huawei improves the Transformer architecture! Pangu-π tackles feature collapse and outperforms LLaMA at the same scale; Tsinghua University and Harvard University jointly developed a new AI system, LangSplat, which can efficiently and accurately handle open-vocabulary queries in 3D space

The next stop after text-to-video: Meta has started generating video from video

Link: https://news.miracleplus.com/share_link/14822

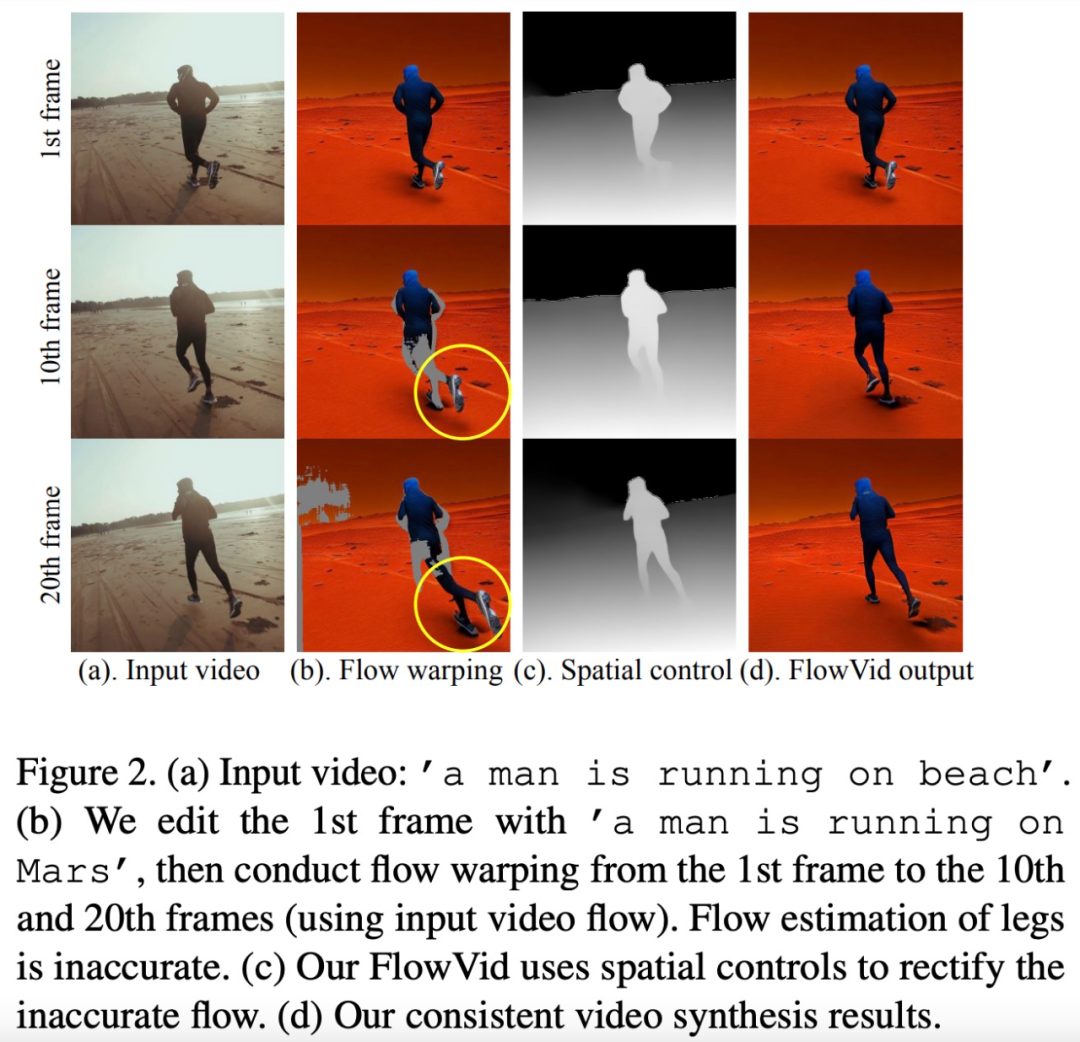

Text-guided video-to-video (V2V) synthesis has wide applications in many fields, such as short-video creation and the film industry more broadly. Diffusion models have transformed image-to-image (I2I) synthesis, but they struggle to maintain temporal consistency between frames in V2V synthesis: applying I2I models to video frame by frame often makes pixels flicker between frames. To solve this problem, researchers from the University of Texas at Austin and Meta GenAI proposed FlowVid, a new V2V synthesis framework that jointly exploits spatial conditions and temporal optical-flow cues from the source video. Given an input video and a text prompt, FlowVid synthesizes a temporally consistent video.
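The role of optical flow can be made concrete with a small sketch. As we understand the paper, FlowVid edits the first frame with an I2I model and propagates that edit to later frames by flow warping, using the warped frames as a soft condition alongside spatial conditions. The code below shows only the warping step, assuming a precomputed dense flow field and nearest-neighbour sampling; `warp_frame` is a hypothetical helper, not FlowVid's code, and a real pipeline would use a flow estimator and bilinear sampling.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]
Flow = List[List[Tuple[float, float]]]


def warp_frame(reference: Frame, flow: Flow) -> Tuple[List[List[Optional[float]]], List[List[bool]]]:
    """Backward-warp `reference` into the target frame's coordinates.

    flow[y][x] = (dx, dy) points from pixel (x, y) in the target frame to its
    source location in the reference frame. Pixels whose source falls outside
    the reference frame are left as None and marked invalid, a crude stand-in
    for occlusion handling.
    """
    h, w = len(reference), len(reference[0])
    warped: List[List[Optional[float]]] = [[None] * w for _ in range(h)]
    valid = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            sx, sy = round(x + dx), round(y + dy)  # nearest-neighbour sampling
            if 0 <= sx < w and 0 <= sy < h:
                warped[y][x] = reference[sy][sx]
                valid[y][x] = True
    return warped, valid


# Hypothetical edited first frame; every pixel moves one step right in the next frame,
# so each target pixel samples from one column to its left.
edited_first = [[1.0, 2.0, 3.0],
                [4.0, 5.0, 6.0]]
flow = [[(-1.0, 0.0)] * 3 for _ in range(2)]
warped, valid = warp_frame(edited_first, flow)
print(warped)  # [[None, 1.0, 2.0], [None, 4.0, 5.0]]
```

The invalid left column is exactly where a diffusion model still has to fill in content, which is why warped frames serve as a condition rather than as the final output.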

Generating high-definition textures for 3D assets: Tencent uses AI to expand game skins

Link: https://news.miracleplus.com/share_link/14823

Recently, Tencent announced Paint3D, a technology that generates high-resolution, lighting-free and diverse texture maps for untextured 3D models from text or image input, and can texture-paint any 3D object.

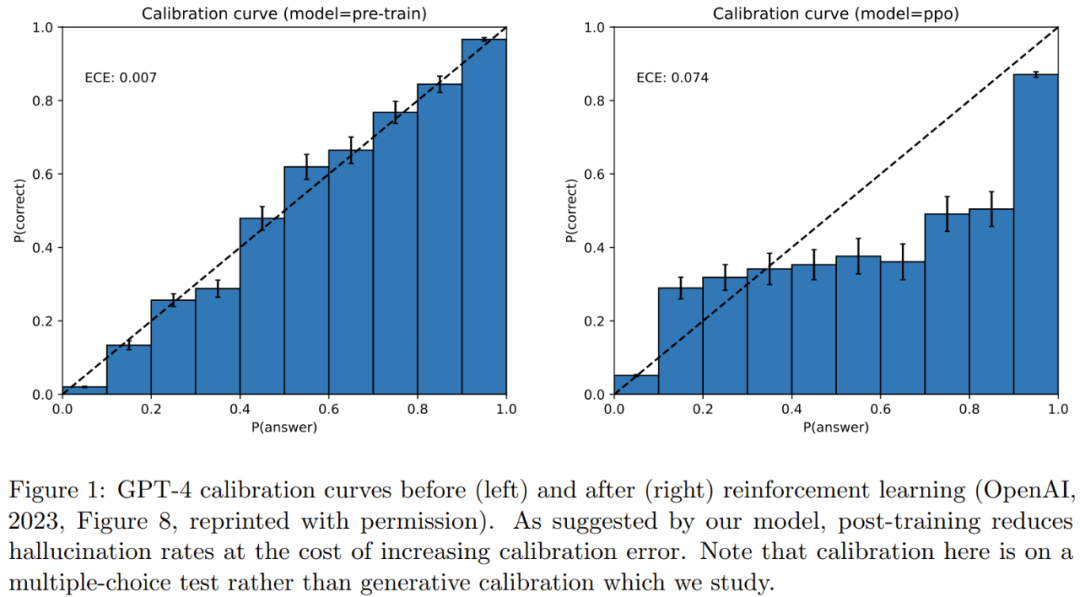

Is there no solution to large model hallucination? Theory proves that calibrated LMs must hallucinate

Link: https://news.miracleplus.com/share_link/14824

Although large language models (LLMs) have demonstrated excellent capabilities on many downstream tasks, they still have problems in practical applications. One important flaw is “hallucination”: responses generated by AI algorithms that appear plausible but are false or misleading. Since LLMs exploded in popularity, researchers have worked to analyze and mitigate hallucination, which makes it difficult to apply LLMs broadly. Now a new study concludes: “Calibrated language models must suffer from hallucinations.” The paper, “Calibrated Language Models Must Hallucinate,” was recently published by Adam Tauman Kalai, senior researcher at Microsoft Research, and Santosh S. Vempala, professor at the Georgia Institute of Technology. It shows that there is an inherent statistical reason why pretrained language models hallucinate certain types of facts, independent of the Transformer architecture or data quality.
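The “statistical reason” has a concrete shape. According to the paper's abstract, when no single fact carries too much probability, a calibrated model's hallucination rate is close to the fraction of facts that appear exactly once in the training data, a Good-Turing estimate. The sketch below computes that quantity on a made-up corpus; the data and the `monofact_rate` name are illustrative assumptions, and the paper's formal statement has extra terms and conditions not modeled here.

```python
from collections import Counter
from typing import Hashable, Iterable


def monofact_rate(facts: Iterable[Hashable]) -> float:
    """Good-Turing estimate: fraction of observations whose fact occurs exactly once."""
    facts = list(facts)
    if not facts:
        return 0.0
    counts = Counter(facts)
    singletons = sum(1 for c in counts.values() if c == 1)
    return singletons / len(facts)


# Hypothetical toy "training corpus" of (person, birthday) facts.
corpus = [
    ("alice", "03-14"), ("alice", "03-14"), ("alice", "03-14"),
    ("bob", "07-01"), ("bob", "07-01"),
    ("carol", "11-30"),
    ("dave", "02-09"),
    ("erin", "05-22"),
]
print(monofact_rate(corpus))  # 0.375
```

Intuitively, facts seen only once look statistically like facts never seen at all, so a model calibrated to the data's distribution must keep assigning probability to plausible but unseen “facts”, and some of those generations will be false.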

Huawei improves the Transformer architecture! Pangu-π tackles feature collapse, and its performance exceeds LLaMA at the same scale

Link: https://news.miracleplus.com/share_link/14825

Huawei’s Pangu series brings innovation at the architectural level. Huawei’s Noah’s Ark Lab and collaborators jointly launched Pangu-π, a new large language model architecture. It improves on the traditional Transformer by enhancing nonlinearity, which significantly reduces feature collapse; as a direct result, the model’s outputs are more expressive. When trained on the same data, Pangu-π (7B) surpasses a LLaMA 2 model of the same size on multiple tasks while achieving 10% faster inference, and it reaches state-of-the-art performance at the 1B scale. Based on this architecture, the team also developed “Yunshan”, a large model for finance and law. The work is led by AI expert Tao Dacheng.
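What “enhancing nonlinearity” can mean in practice is easiest to see in the activation function. As we understand the paper, Pangu-π adds augmented shortcuts to attention and, in the feed-forward block, uses a series-informed activation: one activation is replaced by a weighted sum of shifted copies of itself, roughly sum_i a_i * act(x + b_i). The sketch below shows that functional form only; ReLU as the base activation and the hand-picked scales and shifts are assumptions (in a trained model they would be learned).

```python
from typing import Sequence


def relu(x: float) -> float:
    return max(0.0, x)


def series_activation(x: float, scales: Sequence[float], shifts: Sequence[float]) -> float:
    """Weighted sum of shifted base activations: sum_i scales[i] * relu(x + shifts[i])."""
    if len(scales) != len(shifts):
        raise ValueError("scales and shifts must have the same length")
    return sum(a * relu(x + b) for a, b in zip(scales, shifts))


# Hypothetical parameters; in the real architecture these would be trainable.
scales = [1.0, 0.5, -0.25]
shifts = [0.0, 1.0, -1.0]
print([series_activation(x, scales, shifts) for x in (-2.0, 0.0, 2.0)])  # [0.0, 0.5, 3.25]
```

With three terms the function is piecewise linear with kinks at x = -1, 0 and 1 instead of a single kink at 0, giving each neuron more nonlinear capacity at little extra cost.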

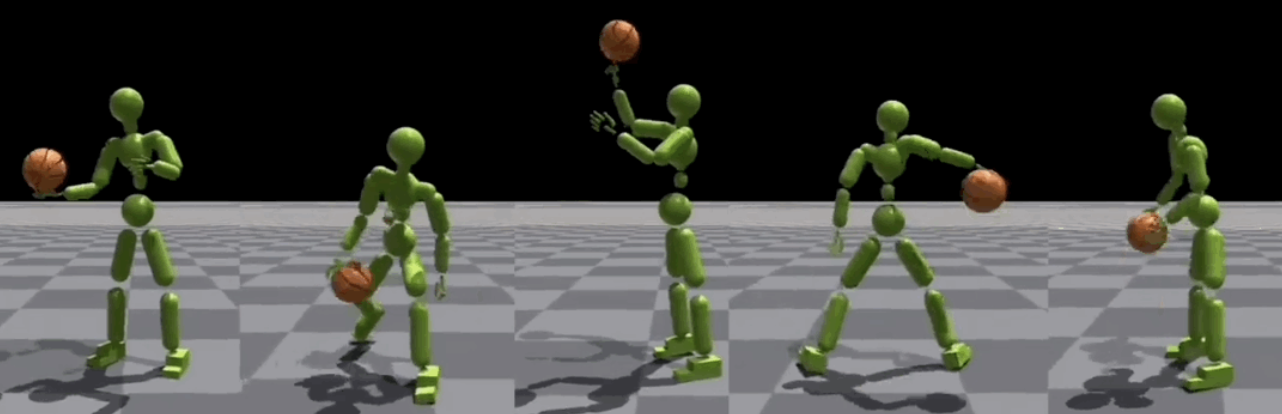

A real-life “Slam Dunk”: a simulated humanoid robot copies human basketball moves one-to-one, learning them after a single look without task-specific rewards.

Link: https://news.miracleplus.com/share_link/14826

A recent study called PhysHOI enables physically simulated humanoid robots to learn and imitate human actions and skills by watching demonstrations of human-object interaction (HOI). Notably, PhysHOI does not require a reward mechanism designed for each specific task; the robot learns and adapts on its own. Moreover, the robot’s body has a total of 51×3 independent control points, so the simulation can be highly realistic.
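Avoiding task-specific rewards usually means scoring how closely the simulation tracks the demonstration itself. The sketch below shows a generic task-agnostic imitation reward of that kind: exponentiated tracking errors for body pose, object position and contact state, multiplied together. The scales, feature vectors and the `imitation_reward` helper are illustrative assumptions, not PhysHOI's exact formulation (which, as we understand it, also relies on a contact graph).

```python
import math
from typing import Sequence


def tracking_reward(sim: Sequence[float], ref: Sequence[float], scale: float) -> float:
    """exp(-scale * squared error): 1.0 for a perfect match, decaying toward 0."""
    if len(sim) != len(ref):
        raise ValueError("sim and ref must have the same length")
    err = sum((s - r) ** 2 for s, r in zip(sim, ref))
    return math.exp(-scale * err)


def imitation_reward(body_sim: Sequence[float], body_ref: Sequence[float],
                     obj_sim: Sequence[float], obj_ref: Sequence[float],
                     contact_sim: Sequence[float], contact_ref: Sequence[float]) -> float:
    """Task-agnostic reward: the same formula works for dribbling, layups, etc."""
    r_body = tracking_reward(body_sim, body_ref, scale=2.0)            # joint positions (hypothetical features)
    r_obj = tracking_reward(obj_sim, obj_ref, scale=2.0)               # object (e.g. ball) position
    r_contact = tracking_reward(contact_sim, contact_ref, scale=1.0)   # 0/1 contact flags per body part
    # Multiplying (rather than adding) means the policy only scores well when
    # body, object and contacts all match the demonstration at the same time.
    return r_body * r_obj * r_contact


# Perfect match with the demonstration frame -> reward 1.0.
print(imitation_reward([0.0, 0.0], [0.0, 0.0], [1.0], [1.0], [1, 0], [1, 0]))  # 1.0
```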

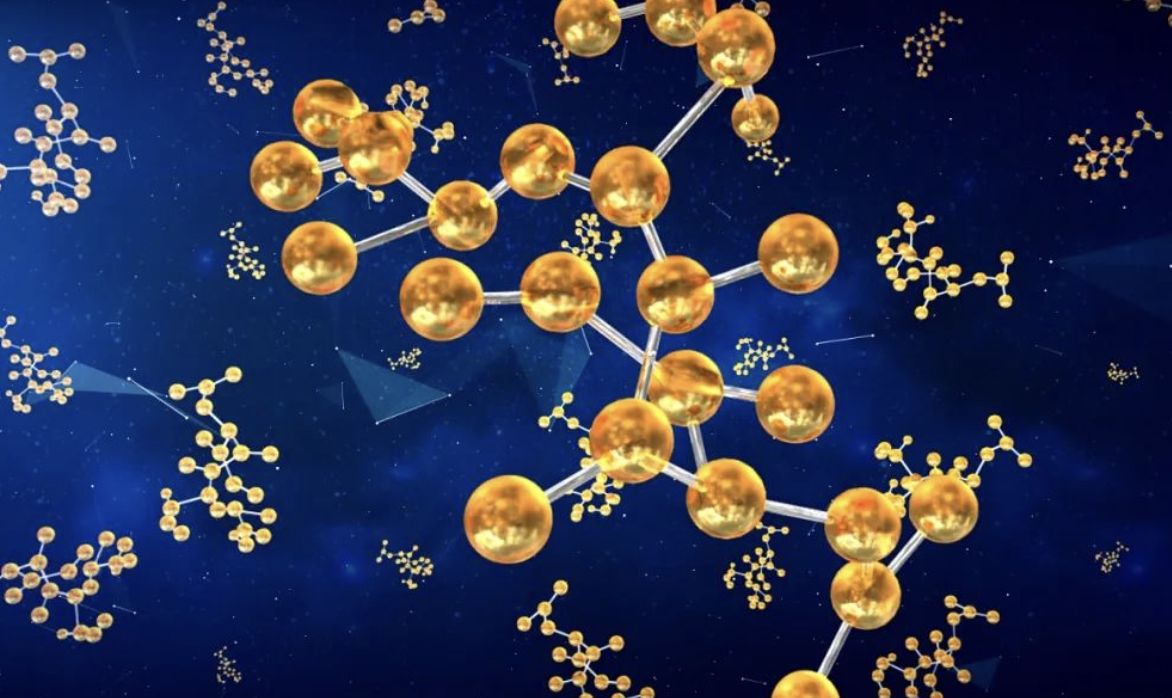

MIT team develops a machine learning-driven, closed-loop autonomous molecular discovery platform that discovered, synthesized and characterized 303 new molecules

Link: https://news.miracleplus.com/share_link/14827

Traditionally, discovering molecules with desired properties has been driven by manual experimentation, chemists’ intuition, and an understanding of mechanisms and first principles. As chemists increasingly use automated equipment and predictive synthesis algorithms, autonomous research facilities are getting closer. Recently, MIT researchers developed a closed-loop autonomous molecular discovery platform powered by integrated machine learning tools to accelerate the design of molecules with desired properties. The platform explores chemical space and exploits known chemical structures without manual experimentation. Across two case studies, it attempted more than 3000 reactions, more than 1000 of which yielded the predicted reaction products, and 303 previously unreported dye-like molecules were proposed, synthesized and characterized.
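The closed loop alternates between proposing molecules with a model, making and measuring them with automated equipment, and feeding the results back into the model. The toy sketch below mimics that cycle on made-up one-dimensional “molecules”; the 1-nearest-neighbour surrogate, the greedy selection rule and the `oracle` function standing in for synthesis and characterization are all assumptions for illustration, not the MIT platform’s actual models.

```python
from typing import Callable, Dict, List, Tuple

Candidate = Tuple[float, ...]


def predict(measured: Dict[Candidate, float], x: Candidate) -> float:
    """1-nearest-neighbour surrogate: reuse the property of the closest measured molecule."""
    nearest = min(measured, key=lambda m: sum((a - b) ** 2 for a, b in zip(m, x)))
    return measured[nearest]


def closed_loop(pool: List[Candidate], oracle: Callable[[Candidate], float],
                seed: List[Candidate], rounds: int) -> Dict[Candidate, float]:
    """Design -> make/test -> learn, repeated with no human in between."""
    measured = {c: oracle(c) for c in seed}  # initial experiments
    for _ in range(rounds):
        untested = [c for c in pool if c not in measured]
        if not untested:
            break
        # Design: pick the candidate the surrogate currently rates highest.
        choice = max(untested, key=lambda c: predict(measured, c))
        # Make and test: the oracle stands in for automated synthesis and characterization.
        # Learn: the new measurement is used by the surrogate in the next round.
        measured[choice] = oracle(choice)
    return measured


# Hypothetical property landscape with its optimum at x = 3.
pool = [(0.0,), (1.0,), (2.0,), (3.0,), (4.0,)]
results = closed_loop(pool, lambda c: -(c[0] - 3.0) ** 2, seed=[(0.0,)], rounds=3)
print(max(results, key=results.get))  # (3.0,)
```

This toy loop is purely greedy; a real discovery platform would also weigh model uncertainty so that it keeps exploring chemical space rather than only exploiting what it already knows.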

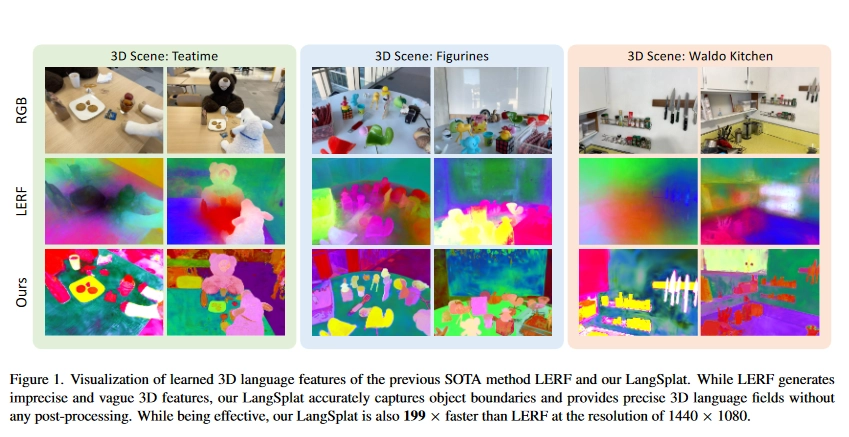

Tsinghua University and Harvard University jointly developed a new AI system, LangSplat, which can efficiently and accurately handle open-vocabulary queries in 3D space.

Link: https://news.miracleplus.com/share_link/14828

LangSplat is the first 3D language field method based on 3D Gaussian Splatting (3DGS). It incorporates SAM and CLIP and outperforms state-of-the-art methods on open-vocabulary 3D object localization and semantic segmentation while being 199 times faster than LERF. Researchers at the University of California, Berkeley, introduced Language Embedded Radiance Fields (LERF) in March 2023, which embed language features from off-the-shelf models (such as CLIP) into NeRF so that objects in a 3D scene can be accurately identified without specialized training. For example, in a NeRF of a bookstore, users can search for specific book titles using natural language. The technology could also be used in robotics, in visual training of simulated robots, and in human interaction with the 3D world.
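Querying a language field comes down to comparing a text embedding with the language feature rendered at each pixel or 3D point. The sketch below implements the relevancy score introduced by LERF, which, as far as we know, LangSplat also adopts: a pairwise softmax between the query and each of a few generic “canonical” phrases (LERF uses phrases such as “object”, “things”, “stuff” and “texture”), taking the minimum. The toy 3-D vectors stand in for CLIP embeddings and are purely hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def _normalize(v: Vector) -> List[float]:
    n = math.sqrt(sum(a * a for a in v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [a / n for a in v]


def _dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def relevancy(lang_feat: Vector, query: Vector, canonical: Sequence[Vector]) -> float:
    """Min over canonical phrases of softmax(query score vs. canonical-phrase score)."""
    f = _normalize(lang_feat)
    q_score = math.exp(_dot(f, _normalize(query)))
    return min(q_score / (q_score + math.exp(_dot(f, _normalize(c)))) for c in canonical)


# Toy "embeddings" (hypothetical): axis 0 ~ the query "red book", axes 1-2 ~ generic phrases.
query = [1.0, 0.0, 0.0]
canonical = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
book_pixel = [0.9, 0.1, 0.0]
shelf_pixel = [0.1, 0.9, 0.2]
print(relevancy(book_pixel, query, canonical) > relevancy(shelf_pixel, query, canonical))  # True
```

Scores above 0.5 mean the feature is closer to the query than to every generic phrase, which is what lets a user type a book title and highlight only the matching region.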