New Year’s Day Summary (December 30th – January 1st) Large Model Daily Collection

[New Year’s Day Summary (December 30th – January 1st) Large Model Daily Collection] Alibaba solves the problem of AI image models being unable to write text; foundation models + robots: where are we now; testing the biological reasoning ability of large language models, with GPT-4, PaLM2, and others put to the test

A new explanation for why GPT-4 has gotten dumber

Since its release, GPT-4, once considered the most powerful model in the world, has gone through several “crises of confidence.” If the “intermittent drop in intelligence” earlier this year was related to OpenAI’s redesign of the GPT-4 architecture, the more recent rumors that it was “becoming lazy” are even funnier: some people found that simply telling GPT-4 “it is winter vacation” made it lazy, as if it had gone into hibernation. When people say a large model has become lazy or stupid, they specifically mean that its zero-shot performance on new tasks has gotten worse. Although these explanations sound amusing, what is actually behind the problem? In a recent paper, researchers at the University of California, Santa Cruz report findings that may explain the underlying reasons for GPT-4’s performance degradation.

Mamba can replace Transformer, but they can also be combined

Link: https://news.miracleplus.com/share_link/14737

The Transformer is great, but it is not perfect, especially when it comes to handling long sequences. State space models (SSMs), by contrast, perform quite well on long sequences. As early as last year, researchers proposed that SSMs could replace Transformers. In fact, the two architectures are not an either-or choice; they can be combined. A recently published NeurIPS 2023 paper, “Block-State Transformers,” takes this approach. It not only supports ultra-long inputs of 65k tokens with ease, but is also very computationally efficient: compared with Transformers that use recurrent units, it can be a full ten times faster! The paper was also praised by Mamba author Tri Dao, who said: “SSM and Transformer seem to complement each other.”
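To make the long-sequence point concrete, here is a minimal sketch of the textbook discrete linear state space recurrence, state_k = A·state_{k-1} + B·u_k and y_k = C·state_k. It is not the Block-State Transformer or Mamba implementation; the function name `ssm_scan` and the toy matrices are assumptions chosen purely for illustration. The thing to notice is that each step touches only a fixed-size state, so cost grows linearly with sequence length.

```python
def ssm_scan(a, b, c, inputs):
    """Run a single-input, single-output linear SSM over a sequence.

    a: n x n state matrix, b: length-n input vector, c: length-n output vector.
    Hypothetical helper for illustration only.
    """
    n = len(a)
    state = [0.0] * n  # fixed-size memory, independent of sequence length
    outputs = []
    for u in inputs:
        # state_k = A @ state_{k-1} + B * u_k
        state = [
            sum(a[i][j] * state[j] for j in range(n)) + b[i] * u
            for i in range(n)
        ]
        # y_k = C @ state_k
        outputs.append(sum(c[i] * state[i] for i in range(n)))
    return outputs


# Toy example: a one-dimensional state that halves at every step.
impulse_response = ssm_scan([[0.5]], [1.0], [1.0], [1.0, 0.0, 0.0])
print(impulse_response)
```

Self-attention, by contrast, compares every token with every other token, which is the cost that hybrid designs try to limit on very long inputs.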

Alibaba solves the problem of AI drawing models being unable to write text

Link: https://news.miracleplus.com/share_link/14738

An AI drawing tool that can accurately write Chinese characters is finally here! It supports four languages, including Chinese, and the position of the text can be specified freely. People can finally say goodbye to the gibberish scrawl that AI drawing models produce in place of text. The tool, called AnyText, comes from Alibaba and can accurately add text to images at specified positions. Previous drawing models were generally unable to add text to images accurately, and even when they could, they struggled with scripts as structurally complex as Chinese. At present, AnyText supports Chinese, English, Japanese, and Korean. Not only are the characters rendered accurately, but their style also blends in well with the image.

A version you haven’t seen before: the mathematical principles of the Transformer revealed

Link: https://news.miracleplus.com/share_link/14739

In 2017, “Attention Is All You Need,” published by Vaswani et al., became an important milestone in the development of neural network architectures. The core contribution of the paper is the self-attention mechanism, the innovation that distinguishes Transformers from traditional architectures and plays an important role in their excellent practical performance. This innovation has become a key catalyst for advances in artificial intelligence in areas such as computer vision and natural language processing, and it has also played a key role in the emergence of large language models. Understanding Transformers, and in particular the mechanism by which self-attention processes data, is therefore a crucial but largely understudied area.
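For readers who want to see what self-attention computes, the following is a minimal sketch of single-head scaled dot-product self-attention, softmax(QKᵀ/√d_k)·V, written in plain Python. It is only an illustration under simplifying assumptions (one head, no masking, no learned training loop); the helper names and the toy identity weights are hypothetical and are not taken from the article discussed here.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head self-attention over token vectors x (one row per token)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = matmul(q, k_t)               # every token scored against every token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights    # each output is a weighted mix of values


# Toy input: two identical tokens with identity projections (assumed values).
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 2.0], [1.0, 2.0]]
output, attn = self_attention(tokens, identity, identity, identity)
print(attn)
print(output)
```

Each row of the attention weights sums to 1, so every output vector is a convex combination of the value vectors; this is the sense in which each token "looks at" all the others.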

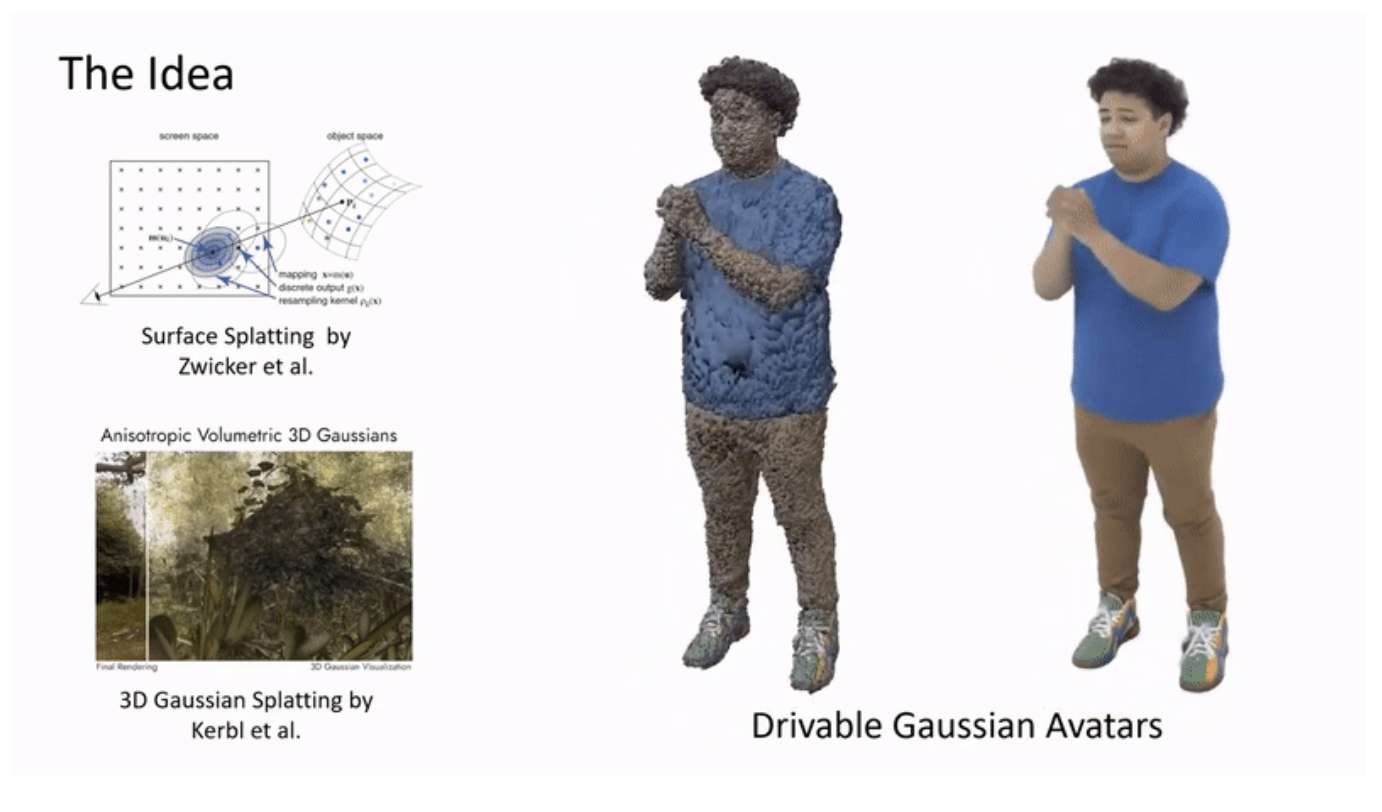

Can AI research also learn from Impressionism? These lifelike people are actually 3D models

Link: https://news.miracleplus.com/share_link/14740

Creating realistic, dynamic virtual characters usually requires accurate 3D registration during training, dense input images during testing, or sometimes both. D3GA may be a good alternative.

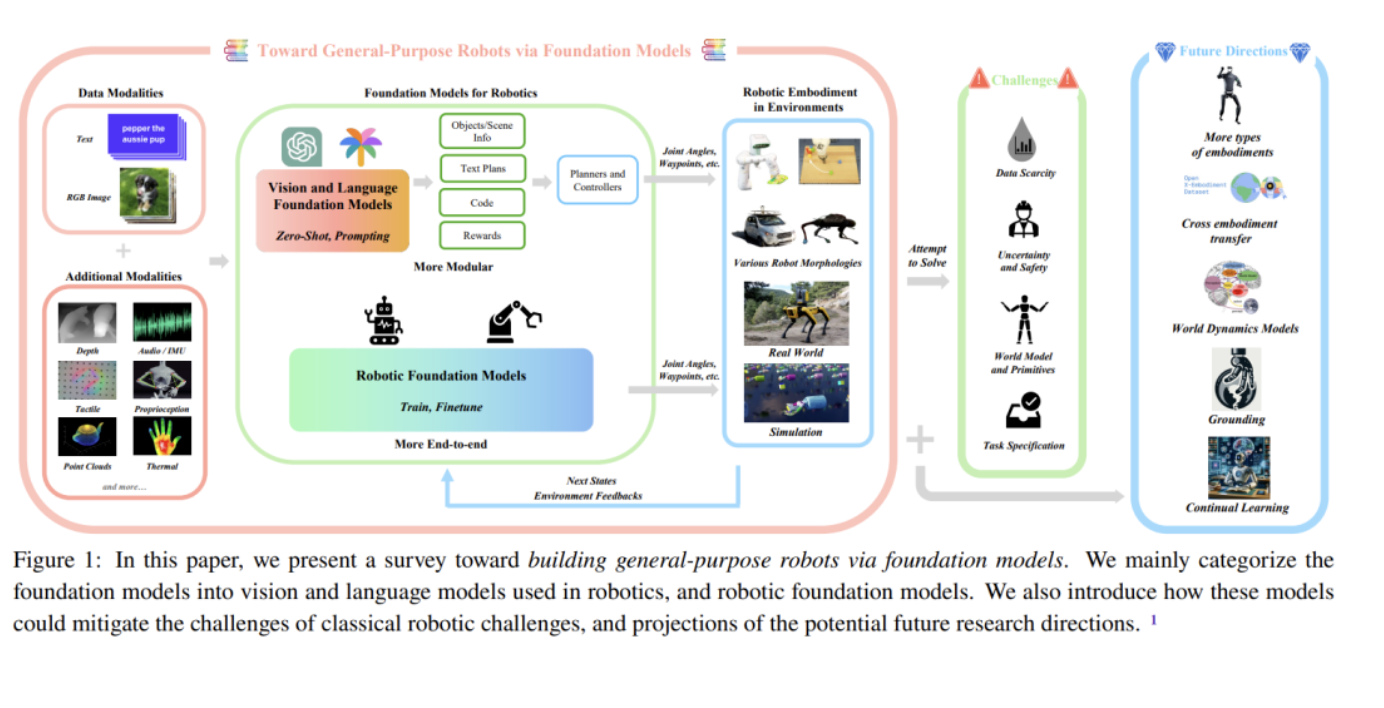

Foundation models + robots: where are we now?

Link: https://news.miracleplus.com/share_link/14741

Robots are a technology with endless possibilities, especially when paired with intelligent technology. Large models, which have recently enabled many transformative applications, are expected to become the intelligent brains of robots, helping them perceive and understand the world, make decisions, and plan. Recently, a joint team led by Yonatan Bisk of CMU and Fei Xia of Google DeepMind released a survey of the application and development of foundation models in robotics.

Testing the biological reasoning ability of large language models: GPT-4, PaLM2, and others are put to the test

Link: https://news.miracleplus.com/share_link/14742

Recent advances in large language models (LLMs) offer new opportunities to integrate artificial general intelligence (AGI) into biological research and education. In the latest study, researchers at the University of Georgia and the Mayo Clinic evaluated the ability of several leading LLMs, including GPT-4, GPT-3.5, PaLM2, Claude2, and SenseNova, to answer conceptual biology questions. The models were tested on a 108-question multiple-choice exam covering topics such as molecular biology, biotechnology, metabolic engineering, and synthetic biology. Among these models, GPT-4 achieved the highest mean score, 90, and showed the greatest consistency across trials with different prompts. The results suggest that GPT-4 has logical reasoning capabilities and the potential to aid biological research through functions such as data analysis, hypothesis generation, and knowledge integration. However, further development and validation are still needed before LLMs can accelerate biological discovery.