August 6 Big Model Daily Collection

[August 6 Big Model Daily Collection] AI chip company Groq brings on Yann LeCun and reaches a valuation of 20 billion RMB; NVIDIA's Cosmos project reportedly scraped a large amount of data without authorization

AI chip company Groq brings on Yann LeCun and reaches a valuation of 20 billion RMB

Link: https://news.miracleplus.com/share_link/36020

Groq, an AI chip company founded by core members of Google's TPU team, recently announced the completion of a $640 million Series D financing round led by BlackRock, with other investors including the venture arms of Cisco and Samsung, bringing the company's valuation to $2.8 billion. At the same time, Groq announced that Yann LeCun, a leading authority in deep learning, will serve as a technical adviser. Since its founding, Groq has focused on developing inference chips and has shown high efficiency and rapid growth potential in the market. Despite an earlier survival crisis, Groq seized the opportunity presented by generative AI, repositioned itself as a provider of the "world's fastest inference," and established partnerships with large companies such as Meta and Samsung. Groq's LPU chip demonstrates performance that exceeds GPUs and CPUs when running large language models, and its single-core temporal instruction set computer architecture processes data efficiently without relying on HBM or CoWoS. Groq is expanding its tokens-as-a-service (TaaS) offering and adding new models and features to its GroqCloud platform, which has attracted more than 360,000 developers. Groq is currently advancing the development and production of its next-generation chips and is working with wafer foundry GlobalFoundries to produce 4nm LPUs.

Related link: https://www.bloomberg.com/news/articles/2024-08-05/ai-startup-groq-gets-2-8-billion-valuation-in-new-funding-round

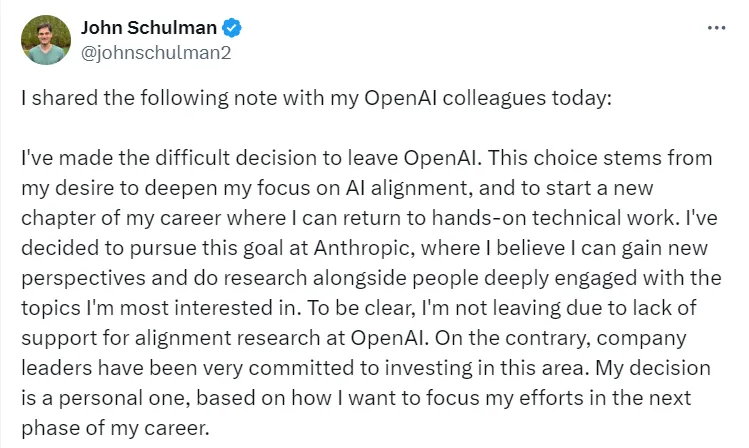

OpenAI executive shake-up: co-founder departs

Link: https://news.miracleplus.com/share_link/36021

OpenAI has recently gone through major executive changes. John Schulman, an OpenAI co-founder who led post-training for ChatGPT, announced that he is leaving to do technical research at competitor Anthropic. In addition, Greg Brockman, another co-founder and OpenAI's president, has decided to extend his leave until the end of the year, and product head Peter Deng has also left. These changes come at a time when OpenAI's business is growing rapidly but the company could face significant losses. OpenAI's leadership has been unstable since Sam Altman was fired and rehired in November last year. Of OpenAI's 11 co-founders, only Sam Altman, Wojciech Zaremba, and Greg Brockman, who is on extended leave, remain. OpenAI also recently hired its first chief financial officer and chief product officer, and moved safety chief Aleksander Madry to another role. Observers have raised questions about the departures and leave, and the news has been widely discussed on social media.

NVIDIA's Cosmos project reportedly scraped a large amount of data without authorization

Link: https://news.miracleplus.com/share_link/36022

NVIDIA is developing a project called Cosmos, which has not yet been made public. The project involves scraping videos from platforms such as Netflix and YouTube to train AI models for NVIDIA's Omniverse 3D world generator, self-driving car systems, and "digital human" products. NVIDIA described the volume of data it collected as roughly a human lifetime's worth of video. Although the company says its actions are "fully compliant with the letter and spirit of copyright law," internal discussions show that employees raised legal concerns about using datasets compiled for academic research, as well as YouTube videos. NVIDIA executives gave explicit approval for the use of this content.

Related link: https://www.404media.co/nvidia-ai-scraping-foundational-model-cosmos-project/

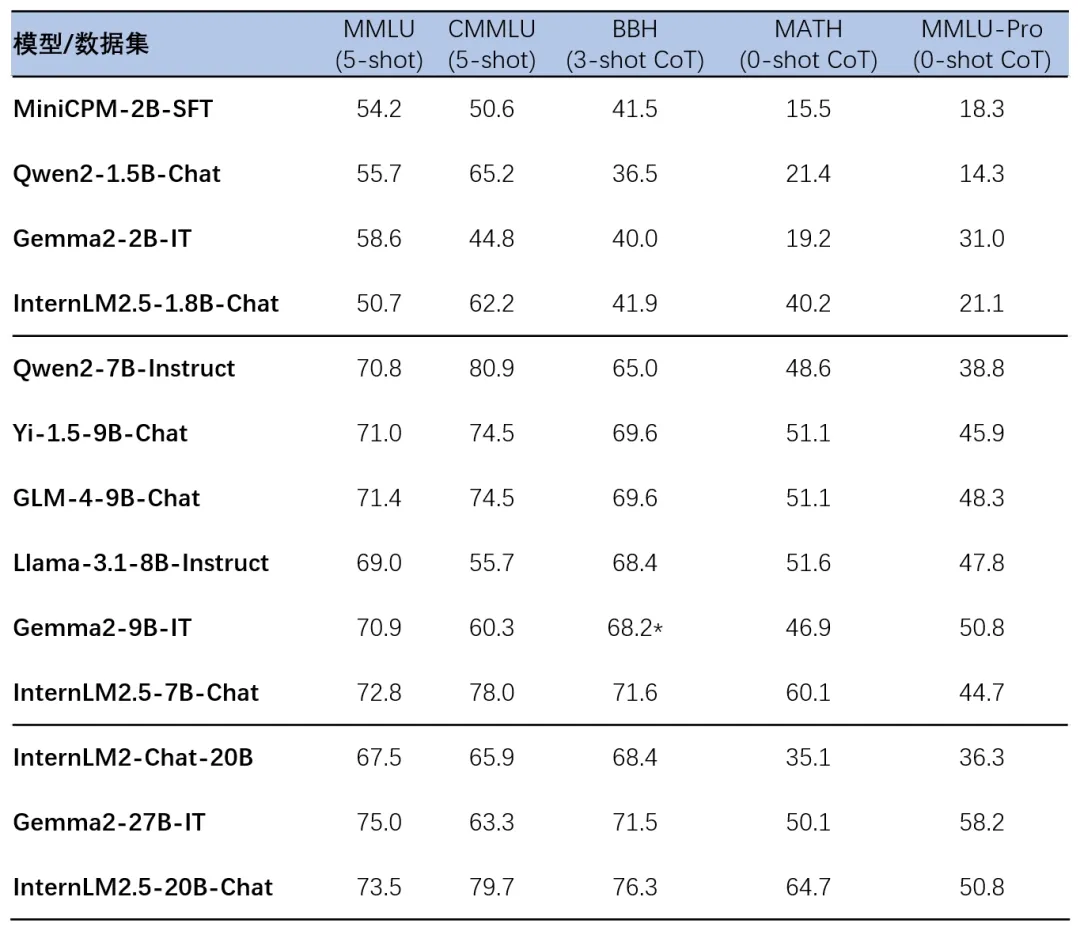

InternLM2.5 (Shusheng·Puyu 2.5) open-sourced in multiple parameter sizes, from ultra-lightweight to high-performance, for diverse application needs

Link: https://news.miracleplus.com/share_link/36023

InternLM2.5 is available in three parameter sizes, 1.8B, 7B, and 20B, targeting different application scenarios and developer needs. The InternLM2.5-20B model reaches 64.7% accuracy on the MATH mathematics benchmark, showing strong ability in complex reasoning tasks. In addition, InternLM2.5 improves performance in scenarios such as long-document understanding and complex agent interaction through efficient pre-training and fine-tuning techniques. The open-source models integrate with multiple inference and fine-tuning frameworks, such as XTuner, LMDeploy, vLLM, and Ollama, allowing developers to quickly deploy and fine-tune models while maintaining compatibility with the OpenAI service interface.
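To illustrate what compatibility with the OpenAI service interface could look like in practice, here is a minimal Python sketch that builds a chat request in the OpenAI chat-completions format and parses a reply in the same format. It is not taken from the InternLM2.5 release: the helper names, the base URL, and the model name are assumptions for illustration, and the endpoint path `/v1/chat/completions` is the one defined by the OpenAI API, which OpenAI-compatible servers typically mirror.

```python
import json
import urllib.request


def build_chat_request(base_url, model, user_message, temperature=0.7):
    """Build (but do not send) an OpenAI-style chat completion request.

    base_url and model are placeholders; use whatever your local
    OpenAI-compatible server (for example one started with LMDeploy
    or vLLM) actually reports.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Return the assistant text from an OpenAI-style response body."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


def ask(base_url, model, user_message):
    """Send the request to a running server and return the reply text."""
    request = build_chat_request(base_url, model, user_message)
    with urllib.request.urlopen(request) as response:
        return extract_reply(response.read())
```

With a server running locally, a call such as `ask("http://localhost:8000", "internlm2_5-7b-chat", "Hello")` would return the model's reply; both arguments here are hypothetical and depend on how the server was launched.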

NeurIPS 2024 workshops announced

Link: https://news.miracleplus.com/share_link/36024

The official NeurIPS 2024 blog released a list of 56 accepted workshops, which will be held on December 14 and 15, 2024. The selected workshops span fields including foundation models, large language models, healthcare, and applications of machine learning in the physical sciences, showing the diversity and depth of the machine learning field. The workshops have begun soliciting papers and other contributions, and organizers are encouraged to use August 30 as the submission deadline.

Join us at OpenAI DevDay

Link: https://news.miracleplus.com/share_link/36025

OpenAI will host OpenAI DevDay in San Francisco, London, and Singapore in the fall of 2024. The event is designed to help developers connect with each other and learn about new AI features and products. It includes hands-on technical workshops, panel discussions, product demonstrations, and developer project showcases to help participants improve their skills and explore new possibilities. The application deadline is August 15.