March 4th Large Model Daily Collection

[March 4th Large Model Daily Collection] CVPR 2024 full score paper: Zhejiang University proposed a new method of high-quality monocular dynamic reconstruction based on deformable three-dimensional Gaussian; the latest SOTA method in computational protein engineering, the Oxford team used codons to train large language models; 53 The PDF has been widely circulated, and core employees have resigned one after another. What secrets does OpenAI have?

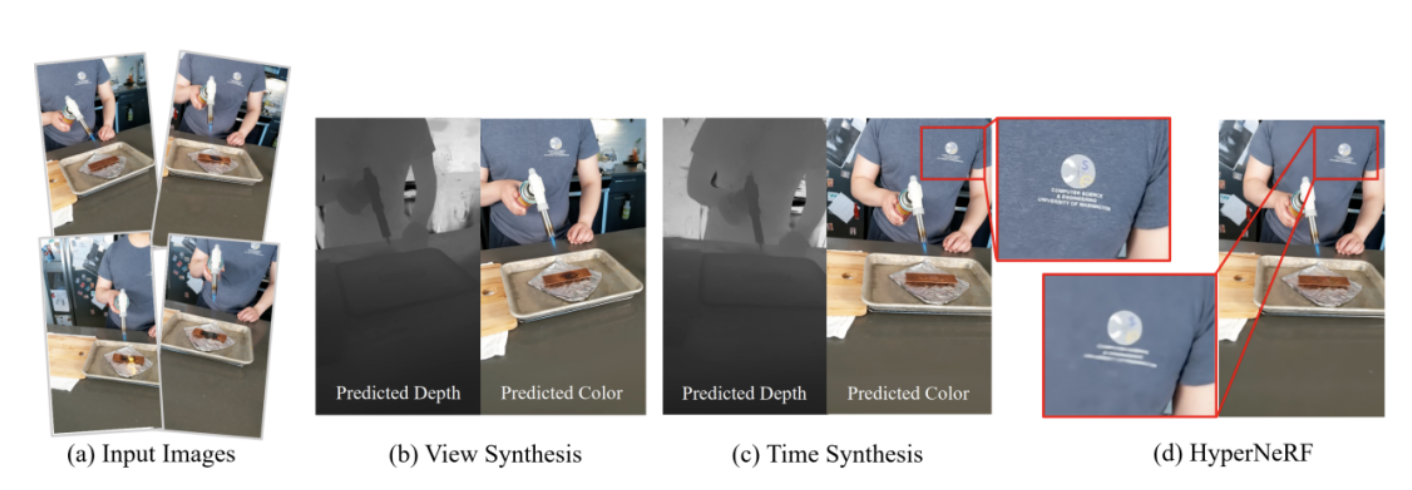

CVPR 2024 full score paper: Zhejiang University proposes a new method of high-quality monocular dynamic reconstruction based on deformable three-dimensional Gaussian

Link: https://news.miracleplus.com/share_link/20133

Monocular Dynamic Scene refers to a dynamic environment observed and analyzed using a monocular camera, in which objects in the scene can move freely. Monocular dynamic scene reconstruction is crucial for tasks such as understanding dynamic changes in the environment, predicting object motion trajectories, and generating dynamic digital assets. With the rise of neural rendering represented by Neural Radiance Field (NeRF), more and more work begins to use implicit representation for three-dimensional reconstruction of dynamic scenes. Although some representative works based on NeRF, such as D-NeRF, Nerfies, K-planes, etc., have achieved satisfactory rendering quality, they are still far away from true photo-realistic rendering. The research team from Zhejiang University and Bytedance believes that the root cause of the above problem is that the NeRF pipeline based on ray casting maps the observation space to the canonical space through backward-flow. ) cannot achieve accurate and clean mapping. Inverse mapping is not conducive to the convergence of learnable structures, so that current methods can only achieve PSNR rendering indicators of 30+ levels on the D-NeRF dataset.

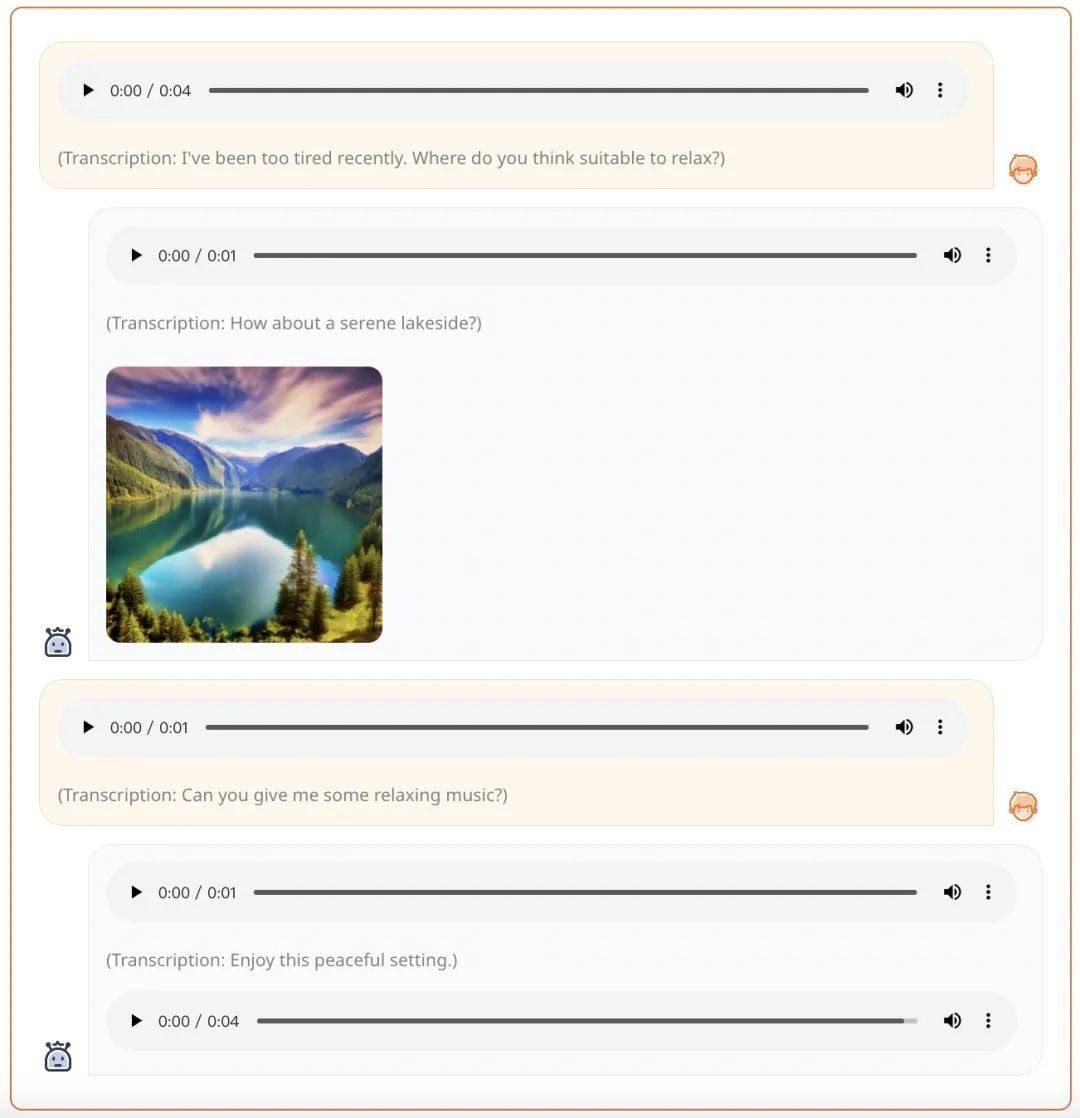

Fudan University and others released AnyGPT: any modal input and output, including images, music, text, and voice.

Link: https://news.miracleplus.com/share_link/20134

Recently, OpenAI’s video generation model Sora has become popular, and the multi-modal capabilities of generative AI models have once again attracted widespread attention. The real world is inherently multimodal, with organisms sensing and exchanging information through different channels, including vision, language, sound, and touch. One promising direction for developing multimodal systems is to enhance the multimodal perception capabilities of LLM, which mainly involves the integration of multimodal encoders with language models, thereby enabling them to process information across various modalities and leverage LLM’s text processing ability to produce a coherent response. However, this strategy is limited to text generation and does not include multi-modal output. Some pioneering works have made significant progress by enabling multimodal understanding and generation in language models, but these models only contain a single non-text modality, such as image or audio. In order to solve the above problems, Fudan University’s Qiu Xipeng team, together with researchers from Multimodal Art Projection (MAP) and Shanghai Artificial Intelligence Laboratory, proposed a multi-modal language model called AnyGPT, which can be understood in any combination of modalities. and reasoning about the content of various modalities. Specifically, AnyGPT can understand instructions intertwined with multiple modalities such as text, voice, images, music, etc., and can skillfully choose the appropriate multi-modal combination to respond.

Just one sentence to make the picture move. Apple uses large model animation to generate, and the result can be edited directly.

Link: https://news.miracleplus.com/share_link/20135

At this stage, the amazing innovative capabilities of large models continue to influence the creative field, especially the video generation technology represented by Sora, which is leading a new generation of trends. While everyone is shocked by Sora, perhaps Apple’s research is also worthy of everyone’s attention. In a study titled “Keyframer: Empowering Animation Design using Large Language Models”, researchers from Apple released Keyframer, a framework that can use LLM to generate animations. The framework allows users to create static 2D images using natural language prompts. animation.

The latest SOTA method for computational protein engineering, the Oxford team uses codons to train large language models

Link: https://news.miracleplus.com/share_link/20136

Protein representations from deep language models have demonstrated state-of-the-art performance in many tasks in computational protein engineering. In recent years, progress has mainly focused on parameter counting, with the capacity of models recently exceeding the size of the datasets they were trained on. Researchers at the University of Oxford propose an alternative direction. They demonstrate that large language models trained on codons rather than amino acid sequences can provide high-quality representations and outperform state-of-the-art models across a variety of tasks. In some tasks, such as species identification, protein and transcript abundance prediction, etc., the team found that language models trained based on codons outperformed all other published protein language models, including some containing more than 50 times more training parameters. Model.

The 53-page PDF has been widely circulated, and core employees have resigned one after another. What secrets does OpenAI have?

Link: https://news.miracleplus.com/share_link/20137

A 53-page PDF about “OpenAI Achieving AGI in 2027” is being widely circulated on the Internet. The document comes from an X account named “vancouver1717”, which was registered in July 2023 and has only two tweets. The newly released PDF document states that OpenAI “will develop human-level AGI by 2027”, “has been training a multi-modal model with 125 trillion parameters since August 2022”, and has “been training in December 2023.” “Completed training in months”, but “canceled release due to high inference costs”. It was mentioned that this model was GPT-5, which was originally planned to be released in 2025. After the cancellation, Gobi (GPT-4.5) was renamed GPT-5. The authenticity of the content is unknown, and people who have read it tend to “disbelieve” it because many judgments lack professionalism. However, this document also mentions the mysterious project Q* (pronounced Q-Star) that was exposed last year. It is said that the next stage of Q* was originally GPT-6, but it has been renamed GPT-7 (originally planned to be launched in 2026 release). In other words, the latest GPT-5 to be released is the original GPT-4.5, the real GPT-5 has been postponed to GPT-6, and GPT-6 has been postponed to GPT-7. But GPT-7 (Q*2025) has an IQ of up to 145, will be released before 2027, and will achieve comprehensive AGI. All these changes are actually related to Musk’s complaint.

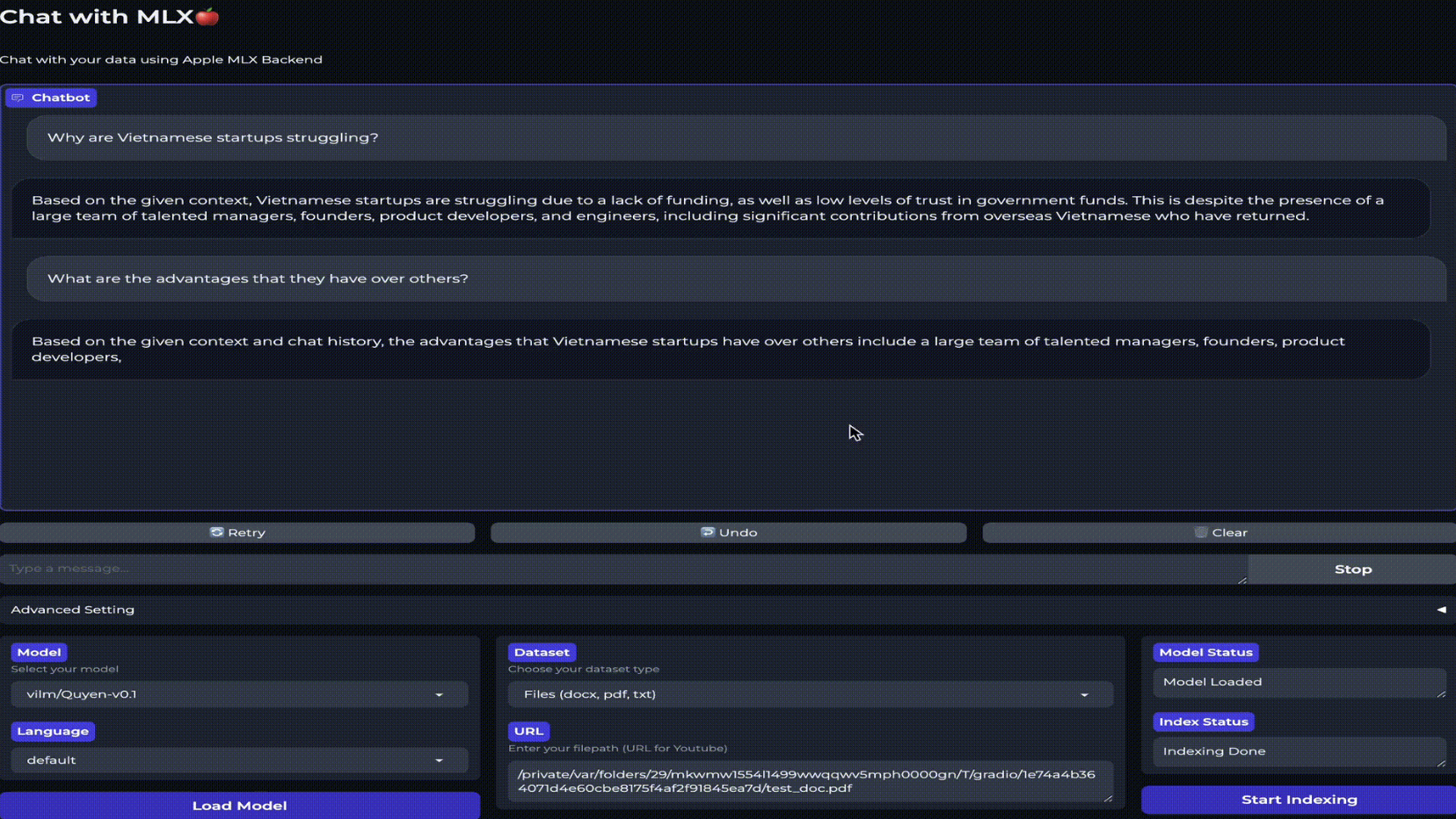

The Mac exclusive large model framework is here! Two lines of code deployment, can talk about local data, and also supports Chinese

Link: https://news.miracleplus.com/share_link/20138

Mac users, finally no longer have to envy N-card players for having exclusive large model Chat with RTX! The new framework launched by the master allows Apple computers to run local large models, and deployment can be completed with only two lines of code. Modeled after Chat with RTX, the name of the framework is Chat with MLX (MLX is Apple’s machine learning framework) and was built by a former OpenAI employee. The functions included in Academician Huang’s framework, such as local document summary and YouTube video analysis, are also available in Chat with MLX. There are a total of 11 available languages, including Chinese, and up to seven large open source models with built-in support. Users who have experienced it said that although the computing burden may be a bit greater for Apple devices, it is easy for novices to get started. Chat with MLX is really a good thing.

Rabbit CEO talks about Apple’s new AI moves and competition | Switching apps back and forth is terrible. R1 is a balance between cost and experience. 99% of startups will die!

Link: https://news.miracleplus.com/share_link/20140

This is Rabbit CEO Jesse Lyu’s latest conversation with TechCrunch reporters after CES. Jesse believes that in the digital age, simplifying user experience and improving efficiency are key. With the R1 device, he not only challenges the existing operating system and application ecosystem, but also leads a new way of human-computer interaction. Facing reporters about the competition among technology giants, Jesse Lyu said that the first lesson he learned from Y Combinator 10 years ago was that 99% of startups will die. A startup is undoubtedly betting on probability, and entrepreneurship is a survival game and it’s better to spend your time focusing on your own stuff instead of worrying about this or that…