January 29th Big Model Daily Collection

[January 29th Big Model Daily Collection] Baichuan Intelligent launches Baichuan 3, a new large model with over 100 billion parameters, with benchmark results overtaking GPT-4 on several Chinese tasks; 500 lines of code to build a conversational search engine, as Jia Yangqing’s Lepton Search is now truly open source; building a large MoE model from scratch, a master-level tutorial is here

Published in Science: faster and more accurate than human chemists, and more inventive. An autonomous AI chemical synthesis robot accelerates chemical discovery

Link: https://news.miracleplus.com/share_link/17024

Research in photochemistry and photocatalysis has grown explosively in recent years, driven in part by the environmentally benign nature of light as a driver of reactions. However, many studies demonstrate only small-scale reactions, and scaling up relies on a patchwork of different techniques that can require a lot of trial and error to optimize. To meet the need for efficient optimization of complex photocatalytic reaction conditions, Professor Timothy Noël’s team at the Van ‘t Hoff Institute for Molecular Sciences at the University of Amsterdam (UvA) in the Netherlands has developed an autonomous chemical synthesis robot with an integrated AI-driven machine learning unit. Dubbed “RoboChem,” the benchtop device outperforms human chemists in speed and accuracy while also demonstrating a high level of ingenuity.

Inverse molecular design based on quantum-assisted deep learning

Link: https://news.miracleplus.com/share_link/17025

Professor Fengqi You’s team at Cornell University proposed a novel inverse molecular design framework that combines the advantages of quantum computing (QC) and generative AI. The framework uses QC-assisted deep learning models to learn and simulate chemical space in order to predict and generate molecular structures with specific chemical properties. Generative AI plays a central role in this process: it learns underlying structure-property relationships from large amounts of molecular data and generates new molecular candidates that not only match preset properties but also take synthetic feasibility into account. Quantum computing contributes computing power and optimization algorithms, addressing the performance bottleneck that classical computers face when processing large-scale chemical systems. With this hybrid quantum-classical framework, researchers can carry out efficient molecular design in complex chemical space, opening up new avenues for the discovery of new molecules and the advancement of materials science.

Baichuan Intelligent launches Baichuan 3, a large model with over 100 billion parameters; benchmark results: surpassing GPT-4 on several Chinese tasks

Link: https://news.miracleplus.com/share_link/17026

Baichuan Intelligent, which had been following a monthly release schedule, has accelerated sharply and moved to semi-monthly updates: it has released Baichuan 3, its latest large model with over 100 billion parameters and the third generation of its base model. Just 20 days earlier, the large-model company founded by Wang Xiaochuan had released the role-playing model Baichuan-NPC. More notable still, this model update focuses on demonstrating the model’s capabilities in medical scenarios.

500 lines of code to build a conversational search engine: Jia Yangqing’s Lepton Search is now truly open source

Link: https://news.miracleplus.com/share_link/17027

The Lepton Search source code that Jia Yangqing promised has arrived. The day before yesterday, Jia Yangqing posted the link to the Lepton Search open source project on Twitter and said that any person or company can use the code freely. In other words, you can build your own conversational search engine with fewer than 500 lines of Python code. Today, Lepton Search is on the GitHub trending list again. In addition, some people have already used this open source project to build their own web applications and report that the quality is very high, on par with the AI-driven search engine Perplexity.
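The general pattern behind a conversational search engine of this kind is short enough to sketch. The following is a minimal illustration of a retrieve-then-answer flow, not Lepton Search's actual code: `search_fn` and `llm_fn` are hypothetical callables standing in for a web search API and a language model, and the prompt wording is an assumption.

```python
from typing import Callable, Dict, List

# Hypothetical types: a search result is a dict with "title", "url", "snippet".
SearchFn = Callable[[str], List[Dict[str, str]]]
LlmFn = Callable[[str], str]


def build_prompt(query: str, results: List[Dict[str, str]]) -> str:
    """Number each search snippet so the model can cite it as [1], [2], ..."""
    context = "\n".join(
        f"[{i}] {r['title']} ({r['url']}): {r['snippet']}"
        for i, r in enumerate(results, start=1)
    )
    return (
        "Answer the question using only the numbered sources below, "
        "citing them like [1].\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


def answer(query: str, search_fn: SearchFn, llm_fn: LlmFn) -> str:
    """Retrieve sources for the query, then ask the model to answer from them."""
    results = search_fn(query)
    return llm_fn(build_prompt(query, results))
```

Keeping the search backend and the model behind plain callables means either one can be swapped without touching the prompt-building logic.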

Meta’s official prompt engineering guide: Llama 2 is more efficient when used this way

Link: https://news.miracleplus.com/share_link/17028

As large language model (LLM) technology matures, prompt engineering is becoming more and more important. Several organizations, including Microsoft and OpenAI, have published LLM prompt engineering guidelines. Recently, Meta, the creator of the Llama series of open source models, also released an interactive prompt engineering guide for Llama 2, covering prompt engineering techniques and best practices for Llama 2.

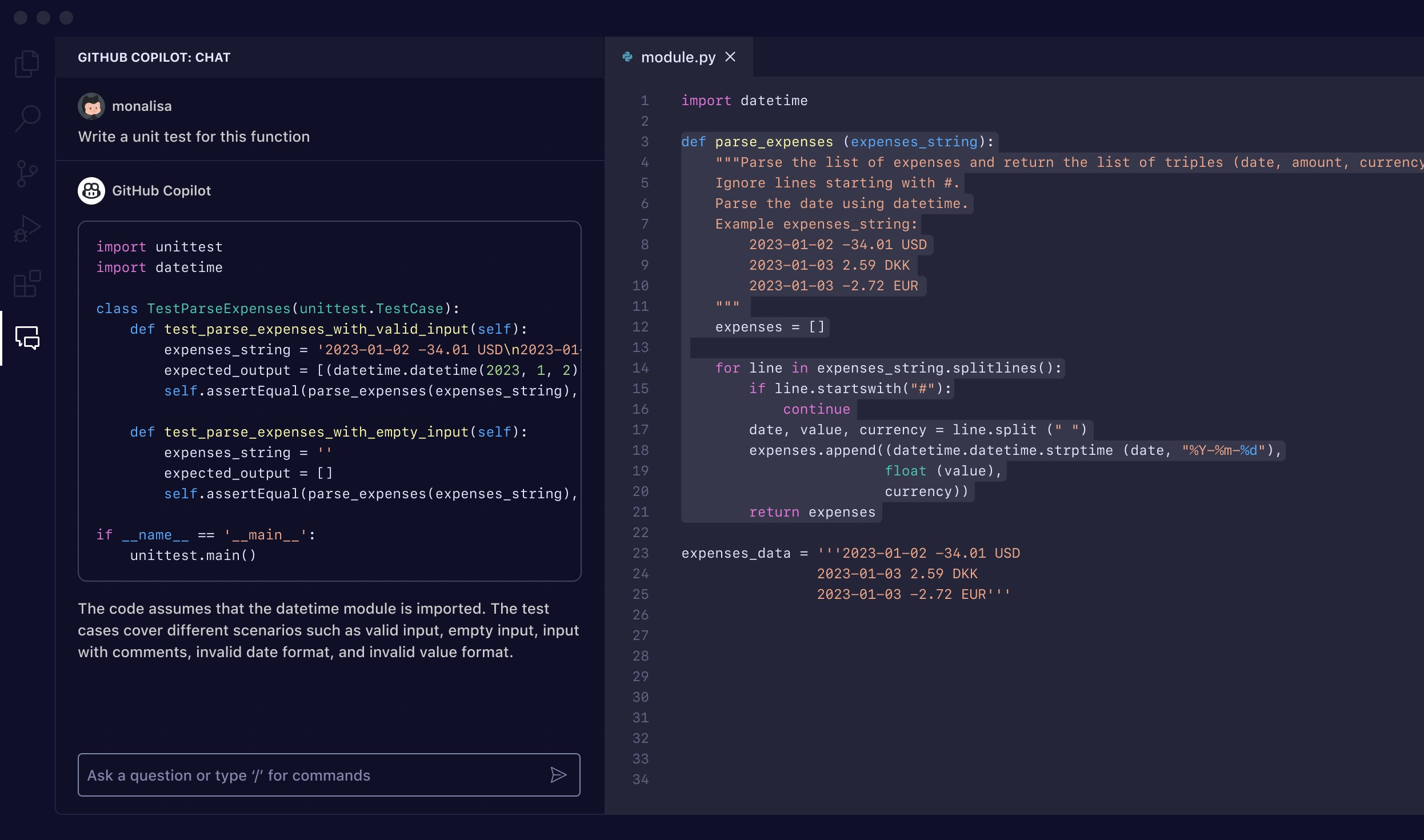
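As one concrete illustration of prompt structure, Llama 2 chat models expect a template that wraps the system prompt in `<<SYS>>` tags inside an `[INST]` block. The helper below is a minimal sketch for a single-turn prompt; the function name is hypothetical and the code is not taken from Meta's guide. The beginning-of-sequence token is left out on the assumption that the tokenizer adds it.

```python
def format_llama2_prompt(system_prompt: str, user_message: str) -> str:
    """Build a single-turn Llama 2 chat prompt (BOS token left to the tokenizer)."""
    return (
        f"[INST] <<SYS>>\n{system_prompt.strip()}\n<</SYS>>\n\n"
        f"{user_message.strip()} [/INST]"
    )


prompt = format_llama2_prompt(
    "You are a concise assistant. Answer in one sentence.",
    "What is prompt engineering?",
)
print(prompt)
```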

AI also piles up mountains of messy code! Research finds that GitHub Copilot code has poor maintainability and favors “brainless rewriting” over refactoring and reusing existing code.

Link: https://news.miracleplus.com/share_link/17029

Programmers who use AI to help write code say it works well, but is the code quality really reliable? The results may surprise you. A company called GitClear analyzed more than 150 million lines of code from the past four years and found that, with the arrival of the GitHub Copilot tool, the code churn rate (that is, code reworked, modified or deleted shortly after being written) has risen significantly: 7.1% in 2023, compared with only 3.3% in 2020, roughly double.
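To make the metric concrete, here is a minimal sketch of how a churn rate could be computed and how the two reported figures compare. The 14-day window and the `(authored_day, changed_day)` record layout are assumptions for illustration; the text above only says "shortly after being written", and the sample data is made up.

```python
from typing import List, Optional, Tuple

# Each record: (day the line was authored, day it was reworked/deleted, or None).
LineRecord = Tuple[int, Optional[int]]


def churn_rate(lines: List[LineRecord], window_days: int = 14) -> float:
    """Fraction of lines changed or removed within `window_days` of authoring."""
    if not lines:
        return 0.0
    churned = sum(
        1 for authored, changed in lines
        if changed is not None and changed - authored <= window_days
    )
    return churned / len(lines)


# Toy data (made up): 2 of 4 lines are reworked within two weeks.
sample = [(0, 3), (0, None), (5, 40), (10, 12)]
print(churn_rate(sample))  # 0.5

# Ratio of the two churn figures reported above.
print(round(7.1 / 3.3, 2))  # 2.15
```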

OpenAI Chairman Bret Taylor’s AI company valued at $1 billion! Sequoia America leads the investment, focusing on enterprise solutions

Link: https://news.miracleplus.com/share_link/17030

Bret Taylor, former co-CEO of Salesforce, previously founded the software company behind Quip, a cloud-based word processor and spreadsheet application, which was sold to Salesforce in 2016 for approximately $750 million. Last year, Taylor joined the OpenAI board as chairman and helped reinstate ousted CEO Sam Altman under an agreement with the ChatGPT maker’s former board. Media reports expect Taylor’s role to be temporary so that he can return to his own company. Sierra, the AI startup co-founded by Taylor and Clay Bavor, a former Google executive who led Google’s AR/VR efforts, is raising a new round of funding.

Building a large MoE model from scratch by hand: a master-level tutorial is here

Link: https://news.miracleplus.com/share_link/17031

https://huggingface.co/blog/AviSoori1x/makemoe-from-scratch

The reputed “secret weapon” of GPT-4, the MoE (Mixture of Experts) architecture, is something you can now build yourself! A machine learning expert on Hugging Face shared how to build a complete MoE system from scratch. The author calls the project MakeMoE, and it details the process from constructing attention to assembling a complete MoE model. According to the author, MakeMoE was inspired by and based on makemore by Andrej Karpathy, a founding member of OpenAI. makemore is a teaching project for natural language processing and machine learning, intended to help learners understand and implement some basic models. Similarly, MakeMoE helps learners gain a deeper understanding of the mixture-of-experts model through a step-by-step building process.
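The core idea of a sparse MoE layer, routing each input to a few experts and mixing their outputs by gate weight, can be shown without any framework. The sketch below is a simplified illustration in plain Python, not MakeMoE's code: in a real model the router logits come from a learned linear layer applied to the input, whereas here they are passed in directly, and the toy experts are assumptions.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[Sequence[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Sequence[float],
    router_logits: Sequence[float],
    experts: Sequence[Expert],
    top_k: int = 2,
) -> List[float]:
    """Route x to the top_k experts and mix their outputs by gate weight."""
    # Indices of the top_k highest router scores.
    order = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)
    top = order[:top_k]
    # Softmax over the selected logits only, so the gate weights sum to 1.
    gates = softmax([router_logits[i] for i in top])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, top):
        y = experts[idx](x)  # only the selected experts are evaluated
        out = [o + gate * v for o, v in zip(out, y)]
    return out


# Toy experts: each scales the input by a different constant.
experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
print(moe_forward([1.0, 1.0], [0.0, 5.0, 5.0, -1.0], experts))  # [2.5, 2.5]
```

Because only `top_k` experts run per input, compute per token stays roughly constant even as the number of experts, and therefore the parameter count, grows.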

Open source PixArt-delta image generator: outputs high-resolution AI images in half a second

Link: https://news.miracleplus.com/share_link/17010

The article introduces PixArt-δ, an advanced text-to-image synthesis framework jointly developed by researchers from Huawei’s Noah’s Ark Laboratory, Dalian University of Technology, Tsinghua University, and Hugging Face. PixArt-δ integrates Latent Consistency Model (LCM) and ControlNet to significantly improve inference speed and can generate high-quality images of 1024 x 1024 pixels in only 0.5 seconds, which is seven times faster than its predecessor PixArt-α. In addition, PixArt-δ’s ControlNet module is specifically designed for Transformer-based models, allowing more precise control in text-to-image diffusion models while maintaining high-quality image generation. The researchers have released the weights of the ControlNet version of PixArt-δ on Hugging Face.