December 15th Big Model Daily Collection

[December 15th Big Model Daily Collection] DeepMind paper published in Nature: a large model finds a new solution to a problem that has troubled mathematicians for decades; GPT-2 can supervise GPT-4: Ilya leads the first paper from OpenAI’s Superalignment team, with empirical results on AI aligning AI; Nvidia’s strong rival is here! Intel predicts Gaudi 3 will surpass the H100 and releases a new generation of AI data center and PC chips; OpenAI says superintelligence may appear within ten years and will provide $10 million to solve alignment problems

DeepMind paper published in Nature: A problem that has troubled mathematicians for decades, a large model finds a new solution

Link: https://news.miracleplus.com/share_link/13353

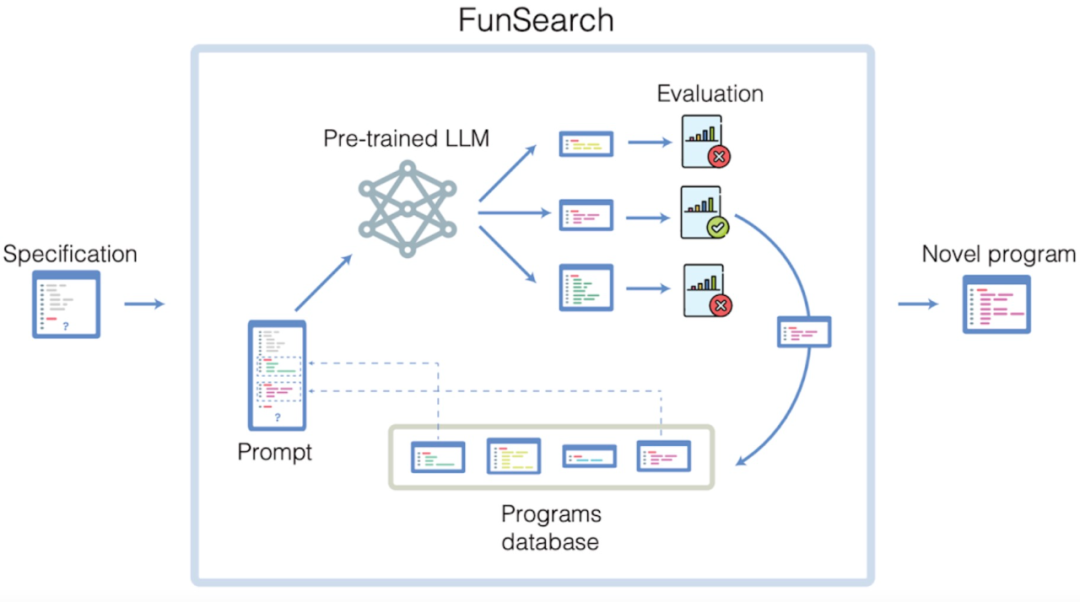

Large language models (LLMs), the top trend in AI this year, are good at combining concepts and can help people solve problems through reading, understanding, writing and coding. But can they discover entirely new knowledge? Using LLMs to make verifiably correct discoveries is challenging because LLMs are known to “hallucinate”, that is, to generate information that is inconsistent with the facts. Now, a team of researchers from Google DeepMind has proposed FunSearch, a new way to search for solutions to problems in mathematics and computer science. FunSearch pairs a pre-trained LLM, which proposes creative solutions in the form of computer code, with an automatic “evaluator” that guards against hallucinations and wrong ideas. By iterating back and forth between these two components, the initial solution evolves into “new knowledge.” The related paper was published in the journal Nature.
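The propose-and-evaluate loop described above can be illustrated with a minimal sketch. This is not DeepMind's implementation: the LLM is replaced by a random mutation function (`propose`), the candidates are pairs of integers rather than programs, and the objective in `evaluate` is a made-up toy. All names here are hypothetical and chosen only for illustration.

```python
import random


def evaluate(candidate):
    """Automatic evaluator: deterministic score, or None for invalid candidates."""
    a, b = candidate
    if not (-10 <= a <= 10 and -10 <= b <= 10):
        return None  # reject proposals outside the allowed range
    # Toy objective (an assumption for this sketch): maximum of 0 at a=3, b=-2.
    return -((a - 3) ** 2 + (b + 2) ** 2)


def propose(parent, rng):
    """Stand-in for the LLM: randomly perturbs an existing candidate."""
    a, b = parent
    return (a + rng.randint(-2, 2), b + rng.randint(-2, 2))


def search(iterations=2000, pool_size=5, seed=0):
    """Alternate between proposing and evaluating, keeping the best candidates."""
    rng = random.Random(seed)
    start = (0, 0)
    pool = [(evaluate(start), start)]
    for _ in range(iterations):
        _, parent = rng.choice(pool)      # sample a promising candidate
        child = propose(parent, rng)      # the "creative" step
        score = evaluate(child)           # the verification step
        if score is None:
            continue                      # invalid ideas never enter the pool
        pool.append((score, child))
        pool = sorted(set(pool), reverse=True)[:pool_size]
    return pool[0]


best_score, best_candidate = search()
print(best_score, best_candidate)
```

Because only candidates that pass the evaluator enter the pool, a wrong proposal costs one wasted iteration at most; it never contaminates later results.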

NeurIPS 2023 | Realistic, controllable, and scalable: LightSim, a new lighting simulation platform for autonomous driving

Link: https://news.miracleplus.com/share_link/13354

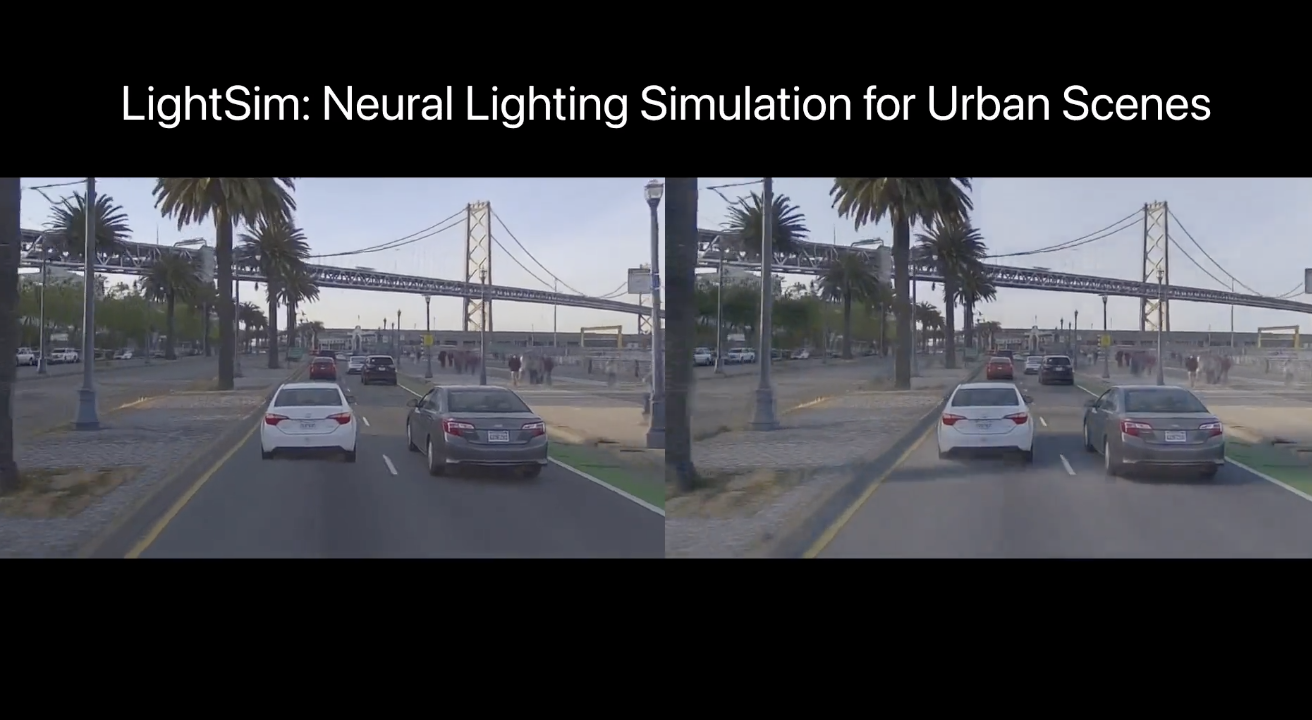

Recently, researchers from Waabi, the University of Toronto, the University of Waterloo and MIT presented LightSim, a new lighting simulation platform for autonomous driving, at NeurIPS 2023. They propose a method for generating paired illumination training data from real data, addressing the problems of missing data and the loss incurred when transferring models. LightSim uses neural radiance fields (NeRF) and physics-based deep networks to render vehicle driving videos, achieving lighting simulation of dynamic scenes on large-scale real data for the first time.

GPT-2 can supervise GPT-4: Ilya leads the first paper from OpenAI’s Superalignment team, with empirical results on AI aligning AI

Link: https://news.miracleplus.com/share_link/13355

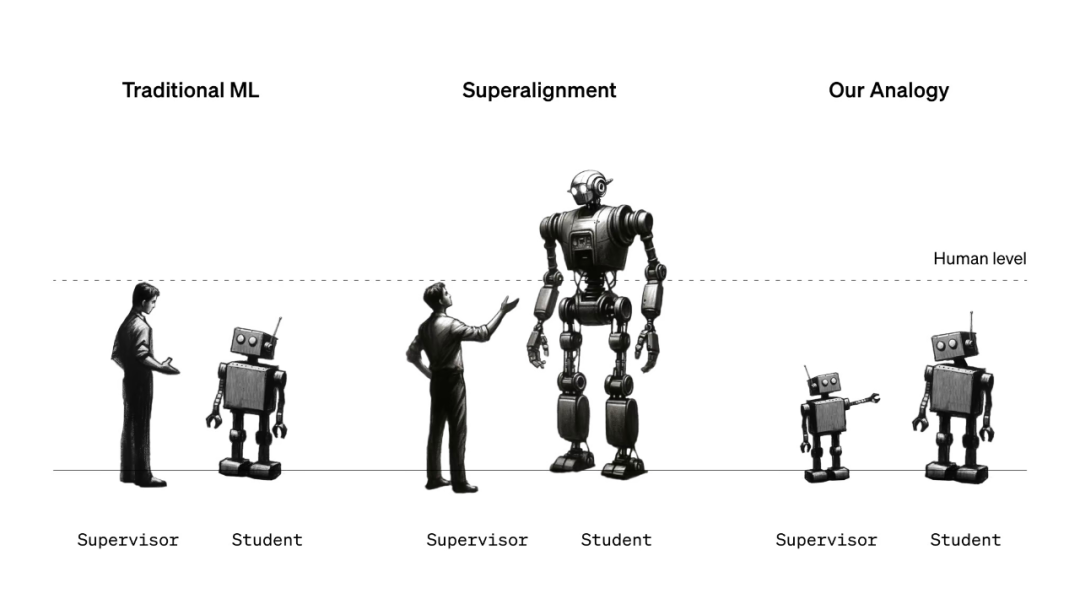

In a recent interview, OpenAI chief scientist Ilya Sutskever boldly claimed that if a model can predict the next word well, it understands the profound reality that led to the creation of that word. This means that if AI continues to develop on its current path, an artificial intelligence system that surpasses humans may be born in the near future. Once artificial intelligence surpasses humans, how should we supervise systems that are much smarter than we are? Will human civilization eventually be subverted or even destroyed? Even academic giants like Hinton are pessimistic about this issue: he said that he has “never seen a case where something with a higher level of intelligence was controlled by something with a much lower level of intelligence.” Now, the OpenAI “Superalignment” team has released its first paper since its establishment, claiming to have opened up a new research direction for the empirical alignment of superhuman models.

Completing 3D scenes with 2D images, Google releases NeRFiller

Link: https://news.miracleplus.com/share_link/13356

In many 3D scene captures, parts of the scene are often missing because of mesh reconstruction failures or a lack of observations, for example in object contact areas or hard-to-reach areas. Researchers from Google and the University of California, Berkeley, proposed the NeRFiller framework, which can complete incomplete 3D scenes from 2D images. They also found that arranging the images in a 2×2 grid yields more 3D-consistent inpainting results. In testing, the researchers compared the original data with the reconstructions using multiple evaluation metrics, such as PSNR and SSIM, and recorded the time taken per iteration on different datasets. They found that NeRFiller produced better reconstructions and improved reconstruction efficiency by about 10 times.
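For readers unfamiliar with the metrics mentioned above, PSNR (peak signal-to-noise ratio) is computed from the mean squared error between a reference image and its reconstruction; higher values mean the reconstruction is closer to the reference. The sketch below uses the standard definition on flat lists of pixel values. The function name and the 8-bit default `max_value` are assumptions for illustration, and this is not the evaluation code used by the NeRFiller authors.

```python
import math


def psnr(reference, reconstruction, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if not reference or len(reference) != len(reconstruction):
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - x) ** 2 for r, x in zip(reference, reconstruction)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: no error at all
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [52, 55, 61, 59]
reconstruction = [54, 55, 60, 57]
print(f"PSNR: {psnr(reference, reconstruction):.2f} dB")
```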

Classification performance improved by 10%: CUHK team uses a large protein language model to discover unknown signal peptides

Link: https://news.miracleplus.com/share_link/13357

Signal peptides (SPs) are critical for targeting and transferring transmembrane and secretory proteins to the correct location. Many existing computational tools for predicting SPs ignore the extreme data imbalance problem and rely on additional group information about proteins. Researchers at the Chinese University of Hong Kong developed the Unbiased Organism-agnostic Signal Peptide Network (USPNet), a deep learning method for SP classification and cleavage site prediction. Extensive experimental results show that USPNet improves classification performance by 10% compared with previous methods. USPNet’s SP discovery pipeline is designed to explore never-before-seen SPs from metagenomic data.

Shanghai Jiao Tong University team uses deep learning for motor assessment to promote early screening of cerebral palsy

Link: https://news.miracleplus.com/share_link/13358

The Prechtl General Movement Assessment (GMA) is increasingly recognized for its role in assessing the developmental integrity of the nervous system and predicting motor dysfunction, particularly in conditions such as cerebral palsy (CP). However, the need for trained professionals has hindered the adoption of GMA as an early screening tool in some countries. In the latest study, researchers from Shanghai Jiao Tong University proposed a deep learning-based motor assessment model (MAM) that combines infant videos and basic characteristics, aiming to automate GMA at the fidgety movements (FMs) stage. MAM demonstrated strong performance, achieving an area under the curve (AUC) of 0.967 during external validation. Importantly, it adheres closely to the principles of GMA and is highly interpretable, as it can accurately identify FMs in videos in a way that largely agrees with expert assessments. Using the predicted FM frequency, the researchers introduced a quantitative GMA method that reached an AUC of 0.956 and improved the diagnostic accuracy of GMA beginners by 11.0%. The development of MAM has the potential to significantly simplify early CP screening and revolutionize the field of quantitative video-based medical diagnosis.

Recruitment for internal testing of Flyme AI function opens today: covering Meizu 21/20 series models, with a total of 1,000 places

Link: https://news.miracleplus.com/share_link/13359

Meizu previously announced internal testing recruitment for Flyme 10.5, and today it officially opened internal testing recruitment for Flyme AI functions. The models covered are Meizu 21, Meizu 20, Meizu 20 PRO, and Meizu 20 INFINITY Unbounded Edition. Required version for registration: Flyme 10.23.12.14 daily.

People familiar with the matter: OpenAI discusses embedding GPT-4V into Snapchat’s smart glasses

Link: https://news.miracleplus.com/share_link/13360

OpenAI recently discussed embedding GPT-4 with Vision, its multimodal large model with object recognition capabilities, into products of Snapchat’s parent company, which could bring new capabilities to Snap’s Spectacles smart glasses, according to a person familiar with the matter.

Nvidia’s rival is here! Intel predicts Gaudi 3 will surpass the H100 and releases a new generation of AI data center and PC chips

Link: https://news.miracleplus.com/share_link/13361

Intel is working hard to catch up with Nvidia and win market share in artificial intelligence (AI) with new products. On Thursday, December 14th, Eastern Time, Intel announced a series of new AI products at the company’s AI Everywhere event, including fifth-generation Xeon processors for enterprises and Core Ultra chips for personal computers (PCs). At the same event, Intel CEO Pat Gelsinger publicly showed Gaudi 3, the third-generation Intel AI accelerator for deep learning and large-scale generative AI models, for the first time. Intel plans to release Gaudi 3 next year and says it will outperform Nvidia’s flagship AI chip, the H100. As demand for generative AI solutions continues to increase, Intel expects to capture a larger share of the accelerator market next year with its AI accelerator suite led by Gaudi.

OpenAI says super artificial intelligence could emerge within a decade, will provide $10 million to solve alignment problems

Link: https://news.miracleplus.com/share_link/13362

OpenAI believes that artificial intelligence systems with superhuman abilities in all fields, called superintelligence, may emerge within the next 10 years. A system with such power would bring amazing benefits, but also potential risks if not developed carefully. Against this background, OpenAI, in partnership with Eric Schmidt (former Google CEO), launched the “Superalignment Fast Grants” project to invest $10 million in research dedicated to the alignment and safety of these future superhuman artificial intelligence systems.